Here is a list of main deliverables of the project; their details are given in the subsequent sections.

- Product

- Documentation

- Product Demo

- Practical Exam Dry Run

- Practical Exam

- Peer testing results

- Peer evaluation

Deliverable: Executable

- The product should be delivered as an executable jar file.

- Ideally, the product delivered at v1.4 should be a releasable product. However, in the interest of lowering your workload, we do not penalize if the product is not releasable, as long as the product is acceptance testable.

Deliverable: Source code

- The source code should match the executable, and should include the revision history of the source code, as a Git repo.

Deliverable: User Guide (UG)

- The User Guide (UG) of the product should match the proposed v2.0 of the product and should be in sync with the current version of the product.

- Features not implemented yet should be clearly marked as 'Coming in v2.0'.

- Ensure the UG matches the product precisely, as it will be used by peer testers (and any inaccuracy in the content will be considered bugs).

Deliverable: Developer Guide (DG)

- The Developer Guide (DG) of the product should match the proposed v2.0 of the product and should be in sync with the current version of the product.

- The appendix named Instructions for Manual Testing of the Developer Guide should include testing instructions to cover the features of each team member. There is no need to add testing instructions for existing features if you did not touch them.

💡 What to include in the appendix Instructions for Manual Testing? This appendix is meant to give some guidance to the tester to chart a path through the features, and provide some important test inputs the tester can copy-paste into the app. There is no need to give a long list of test cases including all possible variations. It is up to the tester to come up with those variations. However, if the instructions are inaccurate, or deliberately omit or misstate information to make testing harder (i.e., to annoy the tester), the tester can report it as a bug (because flaws in developer docs are considered bugs).

- Ensure the DG parts included in PPPs match the product precisely, as PPPs will be used by peer evaluators (and any inaccuracy in the content will be considered bugs).

Deliverable: Product Website

- Include an updated version of the online UG and DG that match the v1.4 executable

- README :

- Ensure the

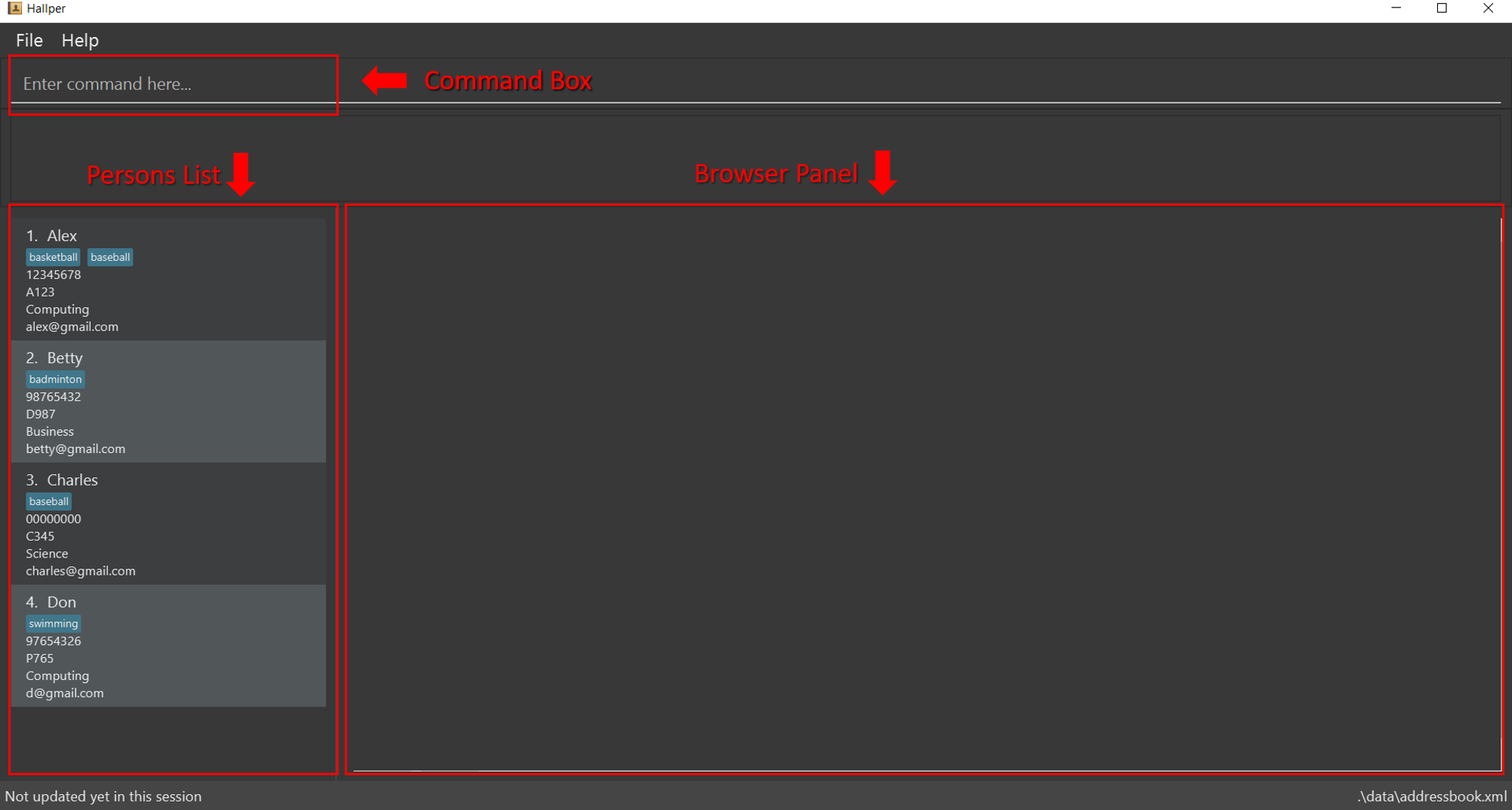

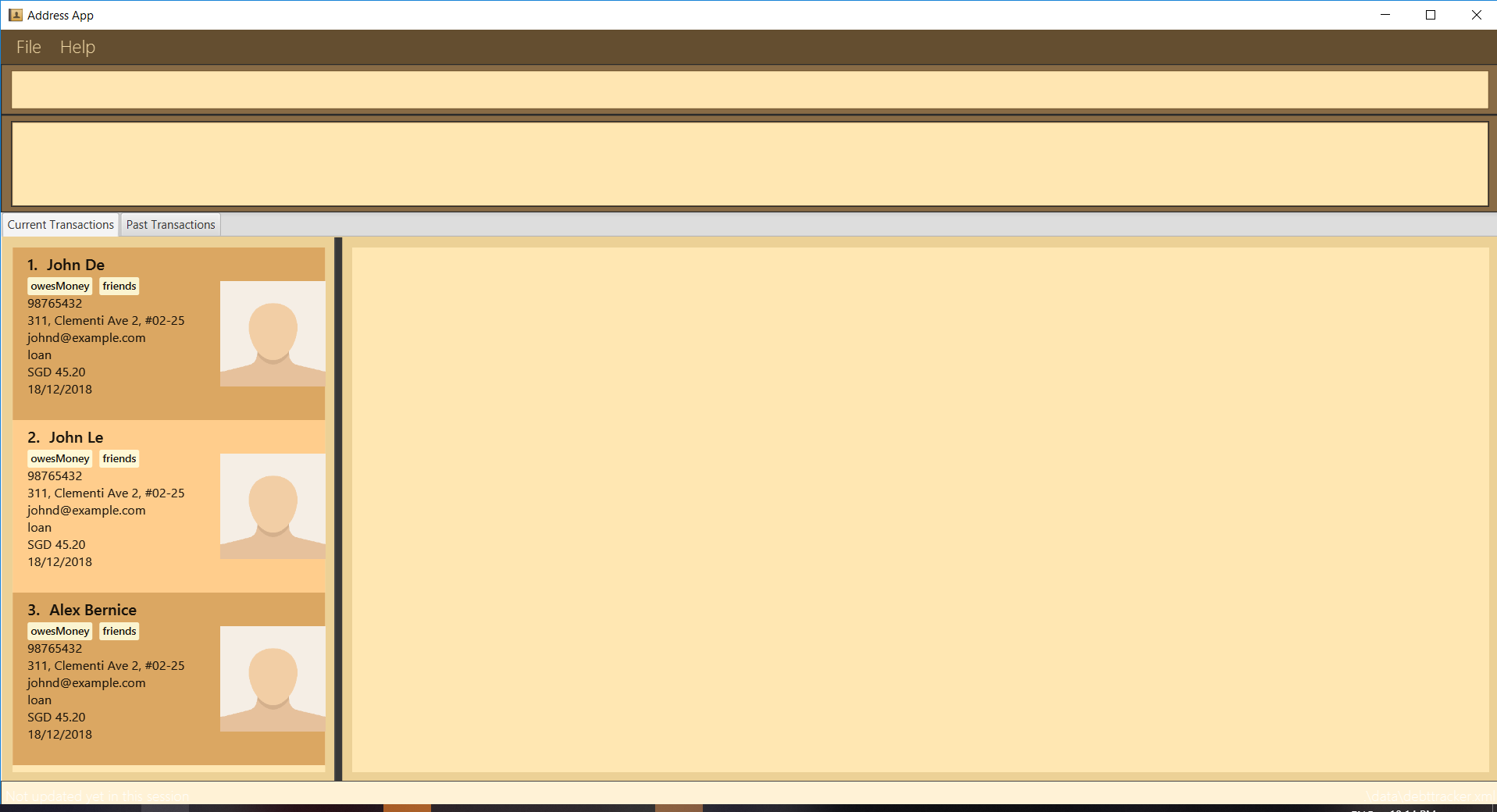

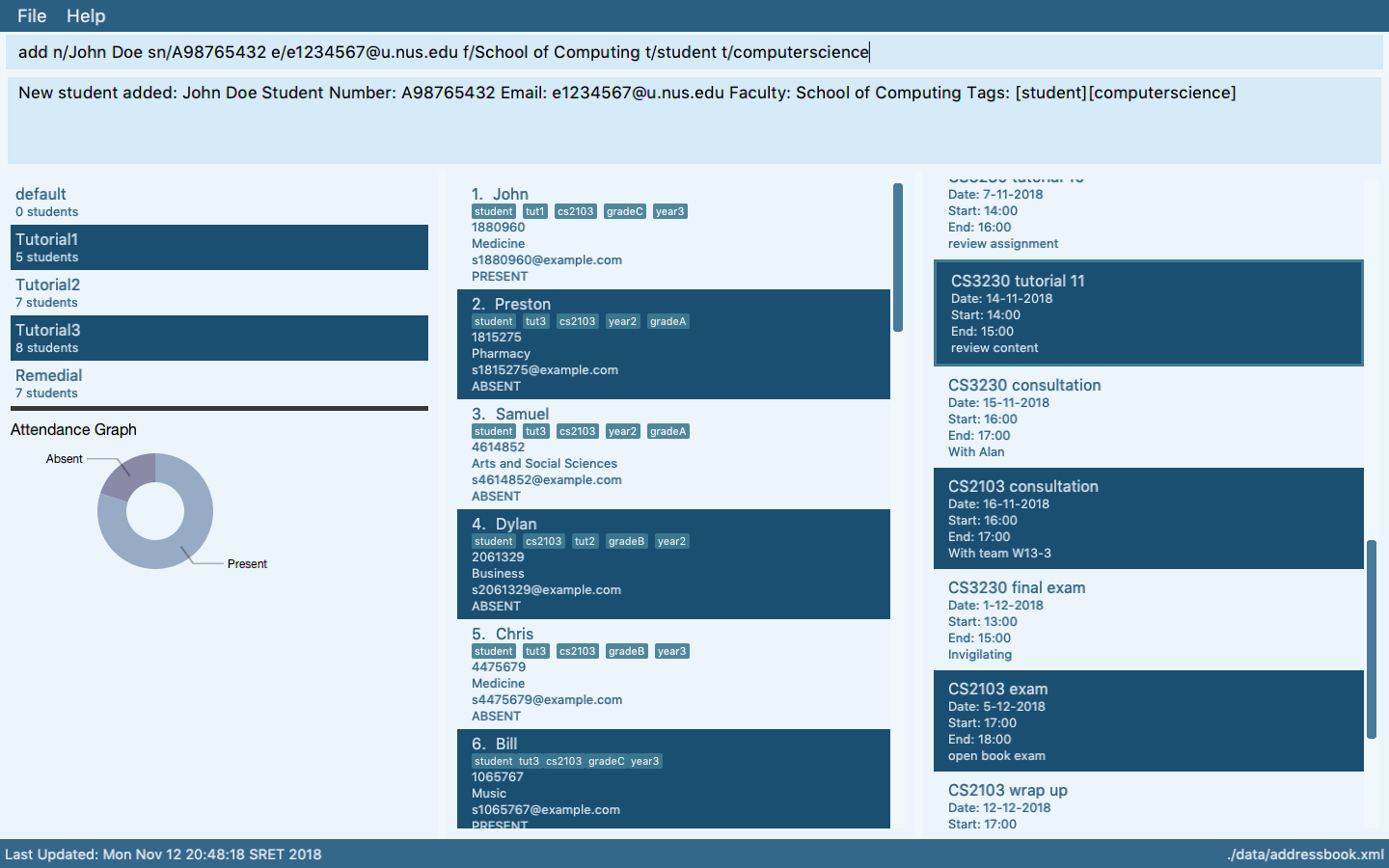

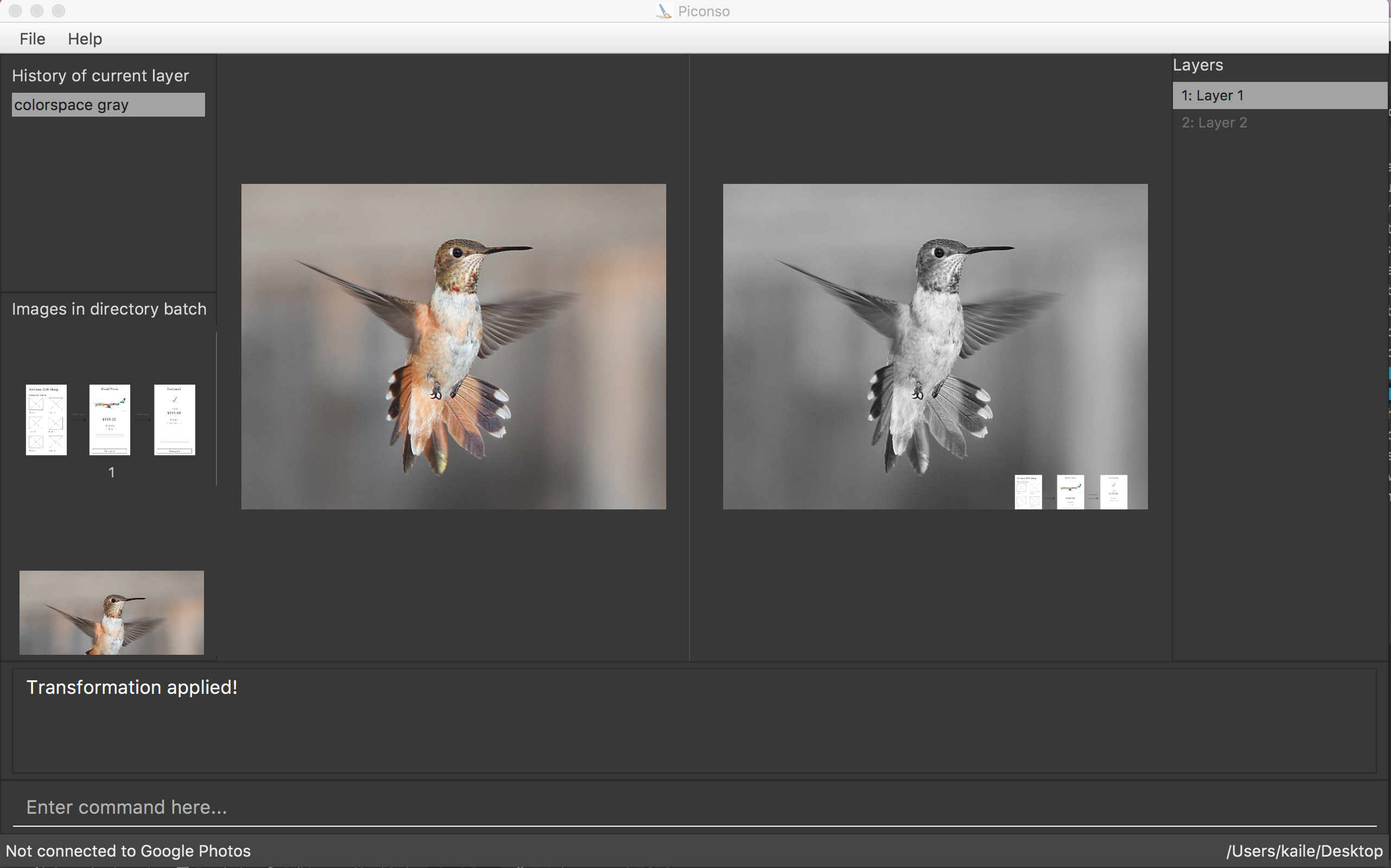

Ui.pngmatches the current product

- Ensure the

💡 Some common sense tips for a good product screenshot

Ui.png represents your product in its full glory.

- Before taking the screenshot, populate the product with data that makes the product look good. For example, if the product is supposed to show photos, use real photos instead of dummy placeholders.

- It should show a state in which the product is well-populated i.e., don't leave data panels largely blank

- Choose a state that showcase the main features of the product i.e., the login screen is not usually a good choice

- Avoid annotations (arrows, callouts, explanatory text etc.); it should look like the product is being in use for real.

- AboutUs : Ensure the following:

- Use a suitable profile photo

-

The purpose of the profile photo is for the teaching team to identify you. Therefore, you should choose a recent individual photo showing your face clearly (i.e., not too small) -- somewhat similar to a passport photo. Some examples can be seen in the 'Teaching team' page. Given below are some examples of good and bad profile photos.

-

If you are uncomfortable posting your photo due to security reasons, you can post a lower resolution image so that it is hard for someone to misuse that image for fraudulent purposes. If you are concerned about privacy, you can request permission to omit your photo from the page by writing to prof.

- Contains a link to each person's Project Portfolio page

- Team member names match full names used by LumiNUS

Deliverable: Project Portfolio Page (PPP)

At the end of the project each student is required to submit a Project Portfolio Page.

- Objective:

  - For you to use (e.g. in your resume) as a well-documented data point of your SE experience

  - For us to use as a data point to evaluate:

    - your contributions to the project

    - your documentation skills

- Sections to include:

  - Overview: A short overview of your product to provide some context to the reader.

  - Summary of Contributions:

    - Code contributed: Give a link to your code on Project Code Dashboard, which should be https://nus-cs2103-ay1819s2.github.io/cs2103-dashboard/#=undefined&search=githbub_username_in_lower_case (replace githbub_username_in_lower_case with your actual username in lower case e.g., johndoe). This link is also available in the Project List Page -- linked to the icon under your photo.

    - Features implemented: A summary of the features you implemented. If you implemented multiple features, you are recommended to indicate which one is the biggest feature.

    - Other contributions:

      - Contributions to project management e.g., setting up project tools, managing releases, managing issue tracker etc.

      - Evidence of helping others e.g. responses you posted in our forum, bugs you reported in other teams' products

      - Evidence of technical leadership e.g. sharing useful information in the forum

  - Contributions to the User Guide: Reproduce the parts in the User Guide that you wrote. This can include features you implemented as well as features you propose to implement.

    The purpose of allowing you to include proposed features is to provide you more flexibility to show your documentation skills. e.g. you can bring in a proposed feature just to give you an opportunity to use a UML diagram type not used by the actual features.

  - Contributions to the Developer Guide: Reproduce the parts in the Developer Guide that you wrote. Ensure there is enough content to evaluate your technical documentation skills and UML modelling skills. You can include descriptions of your design/implementations, possible alternatives, pros and cons of alternatives, etc.

  - If you plan to use the PPP in your Resume, you can also include your SE work outside of the module (will not be graded)

- Format:

  - File name: docs/team/githbub_username_in_lower_case.adoc e.g., docs/team/johndoe.adoc

  - Follow the example in the AddressBook-Level4

  - 💡 You can use Asciidoc's include feature to include sections from the developer guide or the user guide in your PPP. Follow the example in the sample.

  - It is assumed that all contents in the PPP were written primarily by you. If any section is written by someone else e.g. someone else described the feature in the User Guide but you implemented the feature, clearly state that the section was written by someone else (e.g. Start of Extract [from: User Guide] written by Jane Doe). Reason: Your writing skills will be evaluated based on the PPP.

- Page limit:

  | Content | Limit (pages) |
  |---|---|
  | Overview + Summary of contributions | 0.5-1 (soft limit) |
  | Contributions to the User Guide | 1-3 (soft limit) |
  | Contributions to the Developer Guide | 3-6 (soft limit) |
  | Total | 5-10 (strict) |

  - The page limits given above are after converting to PDF format. The actual amount of content you require is less than what these numbers suggest because the HTML → PDF conversion adds a lot of spacing around content.

  - Reason for page limit: These submissions are peer-graded (in the PE) which needs to be done in a limited time span.

If you have more content than the limit given above, you can give representative samples of the UG and DG that showcase your documentation skills. Those samples should be understandable on their own. For the parts left out, you can give an abbreviated version and refer the reader to the full UG/DG for more details.

It's similar to giving extra details as appendices; the reader will look at the UG/DG if the PPP is not enough to make a judgment. For example, when judging documentation quality, if the part in the PPP is not well-written, there is no point reading the rest in the main UG/DG. That's why you need to put the most representative part of your writings in the PPP and still give an abbreviated version of the rest in the PPP itself. Even when judging the quantity of work, the reader should be able to get a good sense of the quantity by combining what is quoted in the PPP and your abbreviated description of the missing part. There is no guarantee that the evaluator will read the full document.
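As a rough illustration of the naming conventions in the Summary of Contributions and Format items above, the sketch below derives the PPP file path and the dashboard link from a GitHub username. The function names are hypothetical and are not part of any module tooling; only the path pattern and the URL prefix are taken from the text above.

```python
# Sketch only: helper names are made up for illustration.
# The URL prefix and the docs/team/<username>.adoc pattern come from the text above.
DASHBOARD_PREFIX = (
    "https://nus-cs2103-ay1819s2.github.io/cs2103-dashboard/#=undefined&search="
)


def ppp_file_name(github_username: str) -> str:
    """Return the PPP path for a user, using the lower-case username."""
    return f"docs/team/{github_username.strip().lower()}.adoc"


def dashboard_link(github_username: str) -> str:
    """Return the Project Code Dashboard link for a user (lower-case username)."""
    return DASHBOARD_PREFIX + github_username.strip().lower()


if __name__ == "__main__":
    print(ppp_file_name("JohnDoe"))
    print(dashboard_link("JohnDoe"))
```

Both helpers lower-case the username because the text asks for the username in lower case in both places.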

Deliverable: Demo

- Duration: Strictly 18 minutes for a 5-person team, 15 minutes for a 4-person team, and 12 minutes for a 3-person team. Exceeding this limit will be penalized. Any set up time will be taken out of your allocated time.

- Target audience: Assume you are giving a demo to a higher-level manager of your company, to brief him/her on the current capabilities of the product. This is the first time they are seeing the new product you developed but they are familiar with the AddressBook-level4 (AB4) product. The actual audience are the evaluators (the team supervisor and another tutor).

- Scope:

  - Each person should demo the enhancements they added. However, it's ok for one member to do all the typing.

  - Subject to the constraint mentioned in the previous point, as far as possible, organize the demo to present a cohesive picture of the product as a whole, presented in a logical order. Remember to explain the target user profile and value proposition early in the demo.

  - It is recommended you showcase how the feature improves the user’s life rather than simply describe each feature.

  - No need to cover design/implementation details as the manager is not interested in those details.

  - Mention features you inherited from AB4 only if they are needed to explain your new features. Reason: existing features will not earn you marks, and the audience is already familiar with AB4 features.

  - Each person should demo their features.

- Structure:

  - Demo the product using the same executable you submitted, on your own laptop, using the TV.

  - It can be a sitting down demo: You'll be demonstrating the features using the TV while sitting down. But you may stand around the TV if you prefer.

  - It will be an uninterrupted demo: The audience members will not interrupt you during the demo. That means you should finish within the given time.

  - The demo should use a sufficient amount of realistic demo data e.g. at least 20 contacts. Trying to demo a product using just 1-2 sample data items creates a bad impression.

  - Dress code: The level of formality is up to you, but it is recommended that the whole team dress at the same level.

- Optimizing the time:

  - Spend as much time as possible on demonstrating the actual product. Not recommended to use slides (if you do, use them sparingly) or videos or lengthy narrations.

    Avoid skits, re-enactments, dramatizations etc. This is not a sales pitch or an infomercial. While you need to show how a user uses the product to get value, you don’t need to act like an imaginary user. For example, [Instead of this] Jim gets a call from his boss. "Ring ring", "hello", "oh hi Jim, can we postpone the meeting?" "Sure". Jim hangs up and curses the boss under his breath. Now he starts typing ..etc. [do this] If Jim needs to postpone the meeting, he can type … It’s not that dramatization is bad or we don’t like it. We simply don’t have enough time for it.

    Note that CS2101 demo requirements may differ. Different context → Different requirements.

  - Rehearse the steps well and ensure you can do a smooth demo. Poor quality demos can affect your grade.

  - Don’t waste time repeating things the target audience already knows. e.g. no need to say things like "We are students from NUS, SoC".

  - Plan the demo to be in sync with the impression you want to create. For example, if you are trying to convince that the product is easy to use, show the easiest way to perform a task before you show the full command with all the bells and whistles.

- Special circumstances:

  - If a significant feature was not merged on time: inform the tutor and get permission to show the unmerged feature using your own version of the code. Obviously, unmerged features earn far fewer marks than a merged equivalent but something is better than nothing.

  - If you have no user visible features to show, you can still contribute to the demo by giving an overview of the product (at the start) and/or giving a wrap-up of the product (at the end).

  - If you are unable to come to the demo due to a valid reason, you can ask a team member to demo your feature. Remember to submit the evidence of your excuse e.g., MC to prof. The demo is part of module assessment and absence without a valid reason will cause you to lose marks.

Deliverable: Practical Exam (Dry Run)

What: The v1.3 is subjected to a round of peer acceptance/system testing, also called the Practical Exam Dry Run as this round of testing will be similar to the graded Practical Exam.

When, where: Week 11, at the lecture

Objectives:

- The primary objective of the PE is to increase the rigor of project assessment. Assessing most aspects of the project involves an element of subjectivity. As the project counts for 50% of the final grade, it is not prudent to rely on evaluations of tutors alone as there can be significant variations between how different tutors assess projects. That is why we collect more data points via the PE so as to minimize the chance of your project being affected by evaluator-bias.

- Note that none of the significant project grade components are calculated solely based on peer ratings. Rather, PE data are mostly used to cross-validate tutor assessments and identify cases that need further investigation. When peer inputs are used for grading, usually they are combined with tutor evaluations with appropriate weight for each. In some cases ratings from team members are given a higher weight compared to ratings from other teams, if that is appropriate.

- As a bonus, PE also gives us an opportunity to evaluate your manual testing skills, product evaluation skills, effort estimation skills etc.

- Note that the PE is not a means of pitting you against each other. Developers and testers play for the same side; they need to push each other to improve the quality of their work -- not bring down each other.

When, where: Week 13 lecture

Grading:

- Your performance in the practical exam will affect your final grade and those of your peers, as explained in the Admin: Project Assessment section.

- As your submissions can affect the grades of peers, note that we have put in measures to identify insincere/random evaluations and penalize accordingly.

Preparation:

- Ensure that you can access the relevant issue tracker given below:

  - for PE Dry Run (at v1.3): nus-cs2103-AY1819S2/pe-dry-run

  - for PE (at v1.4): nus-cs2103-AY1819S2/pe (will open only near the actual PE)

  - These are private repos! If you cannot access the relevant repo, you may not have accepted the invitation to join the GitHub org used by the module. Go to https://github.com/orgs/nus-cs2103-AY1819S2/invitation to accept the invitation.

  - If you cannot find the invitation, post in our forum.

- Ensure you have access to a computer that is able to run module projects e.g. has the right Java version.

- Have a good screen grab tool with annotation features so that you can quickly take a screenshot of a bug, annotate it, and post in the issue tracker.

  - 💡 You can use Ctrl+V to paste a picture from the clipboard into a text box in GitHub issue tracker.

- Charge your computer before coming to the PE session. The testing venue may not have enough charging points.

During:

- Take note of your team to test. It will be given to you by the teaching team (distributed via LumiNUS gradebook).

- Download from LumiNUS all files submitted by the team (i.e. jar file, User Guide, Developer Guide, and Project Portfolio Pages) into an empty folder.

- [60 minutes] Test the product and report bugs as described below:

Testing instructions for PE and PE Dry Run

- What to test:

  - PE Dry Run (at v1.3):

    - Test the product based on the User Guide (the UG is most likely accessible using the help command).

    - Do system testing first i.e., does the product work as specified by the documentation? If there is time left, you can do acceptance testing as well i.e., does the product solve the problem it claims to solve?

  - PE (at v1.4):

    - Test based on the Developer Guide (Appendix named Instructions for Manual Testing) and the User Guide. The testing instructions in the Developer Guide can provide you some guidance but if you follow those instructions strictly, you are unlikely to find many bugs. You can deviate from the instructions to probe areas that are more likely to have bugs.

    - As before, do both system testing and acceptance testing but give priority to system testing as system testing bugs can earn you more credit.

- These are considered bugs:

  - Behavior differs from the User Guide

  - A legitimate user behavior is not handled e.g. incorrect commands, extra parameters

  - Behavior is not specified and differs from normal expectations e.g. error message does not match the error

  - The feature does not solve the stated problem of the intended user i.e., the feature is 'incomplete'

  - Problems in the User Guide e.g., missing/incorrect info

- Where to report bugs: Post bugs in the following issue trackers (not in the team's repo):

  - PE Dry Run (at v1.3): nus-cs2103-AY1819S2/pe-dry-run.

  - PE (at v1.4): nus-cs2103-AY1819S2/pe.

- Bug report format:

  - Post bugs as you find them (i.e., do not wait to post all bugs at the end) because the issue tracker will close exactly at the end of the allocated time.

  - Do not use team ID in bug reports. Reason: to prevent others copying your bug reports

  - Write good quality bug reports; poor quality or incorrect bug reports will not earn credit.

  - Use a descriptive title.

  - Give a good description of the bug with steps to reproduce and screenshots.

  - Assign a severity to the bug report. Bug reports without a severity label are considered severity.Low (lower severity bugs earn lower credit).

    Bug Severity labels:

    - severity.Low: A flaw that is unlikely to affect normal operations of the product. Appears only in very rare situations and causes a minor inconvenience only.

    - severity.Medium: A flaw that causes occasional inconvenience to some users but they can continue to use the product.

    - severity.High: A flaw that affects most users and causes major problems for users. i.e., makes the product almost unusable for most users.

  - Each bug should be a separate issue. The issue will be initialized with one of the following templates:

Bug report

Describe the bug: A clear and concise description of what the bug is.

To Reproduce: Steps to reproduce the behavior:

- Go to '...'

- Click on '....'

- Scroll down to '....'

- See error

Expected behavior: A clear and concise description of what you expected to happen.

Screenshots: If applicable, add screenshots to help explain your problem.

Additional context: Add any other context about the problem here.

Suggestions for improving the product

Please describe the problem: A clear and concise description of what the problem is. E.g., I have to scroll three times to find [...]

Describe the improvement you'd like to suggest: A clear and concise description of what you want to happen. E.g., Better to sort based on [...] for quick access

Additional context: Add any other context or screenshots about the feature request here.

- About posting suggestions:

  - PE Dry Run (at v1.3): You can also post suggestions on how to improve the product. 💡 Be diplomatic when reporting bugs or suggesting improvements. For example, instead of criticising the current behavior, simply suggest alternatives to consider.

  - PE (at v1.4): Do not post suggestions. But if a feature is missing a critical functionality that makes the feature less useful to the intended user, it can be reported as a bug.

- If the product doesn't work at all: If the product fails catastrophically e.g., cannot even launch, you can test the fallback team allocated to you. But in this case you must inform us immediately after the session so that we can send your bug reports to the correct team.

- [Remainder of the session] Evaluate the following aspects. Note down your evaluation in a hard copy (as a backup). Submit via TEAMMATES. You are recommended to complete this during the PE session but you have until the end of the day to submit (or revise) your submissions.

- A. Product Design:

  Evaluate the product design based on how the product V2.0 (not V1.4) is described in the User Guide.

  - unable to judge: You are unable to judge this aspect for some reason e.g., UG is not available or does not have enough information.

  - Target user:

    - target user specified and appropriate: The target user is clearly specified, prefers typing over other modes of input, and not too general (should be narrowed to a specific user group with certain characteristics).

    - value specified and matching: The value offered by the product is clearly specified and matches the target user.

  - Value to the target user:

    - value: low: The value to target user is low. App is not worth using.

    - value: medium: Some small group of target users might find the app worth using.

    - value: high: Most of the target users are likely to find the app worth using.

  - Feature-fit:

    - feature-fit: low: Features don't seem to fit together.

    - feature-fit: medium: Some features fit together but some don't.

    - feature-fit: high: All features fit together.

  - polished: The product looks well-designed.

- B. Quality of user docs:

  Evaluate the quality of user documentation based on the parts of the user guide written by the person, as reproduced in the project portfolio. Evaluate from an end-user perspective.

  - UG/ unable to judge: Less than 1 page worth of UG content written by the student or cannot find PPP

  - UG/ good use of visuals: Uses visuals e.g., screenshots.

  - UG/ good use of examples: Uses examples e.g., sample inputs/outputs.

  - UG/ just enough information: Not too much information. All important information is given.

  - UG/ easy to understand: The information is easy to understand for the target audience.

  - UG/ polished: The document looks neat, well-formatted, and professional.

- C. Quality of developer docs:

  Evaluate the quality of developer documentation based on the developer docs cited/reproduced in the respective project portfolio page. Evaluate from the perspective of a new developer trying to understand how the features are implemented.

  - DG/ unable to judge: Less than 0.5 pages worth of content OR other problems in the document e.g. it looks like the wrong content was included.

  - DG/ too little: 0.5 - 1 page of documentation

  - Diagrams:

    - DG/ types of UML diagrams: 1: Only one type of diagram used (types: Class Diagrams, Object Diagrams, Sequence Diagrams, Activity Diagrams, Use Case Diagrams)

    - DG/ types of UML diagrams: 2: Two types of diagrams used

    - DG/ types of UML diagrams: 3+: Three or more types of diagrams used

    - DG/ UML diagrams suitable: The diagrams are used for the right purpose

    - DG/ UML notation correct: No more than one minor error in the UML notation

    - DG/ diagrams not repetitive: No evidence of repeating the same diagram with minor differences

    - DG/ diagrams not too complicated: Diagrams don't cram too much information into them

    - DG/ diagrams integrates with text: Diagrams are well integrated into the textual explanations

  - DG/ easy to understand: The document is easy to understand/follow

  - DG/ just enough information: Not too much information. All important information is given.

  - DG/ polished: The document looks neat, well-formatted, and professional.

- D. Feature Quality:

  Evaluate the biggest feature done by the student for difficulty, completeness, and testability. Note: examples given below assume that AB4 did not have the commands edit, undo, and redo.

  - Difficulty

    - Feature/ difficulty: unable to judge: You are unable to judge this aspect for some reason.

    - Feature/ difficulty: low: e.g. make the existing find command case insensitive.

    - Feature/ difficulty: medium: e.g. an edit command that requires the user to type all fields, even the ones that are not being edited.

    - Feature/ difficulty: high: e.g., undo/redo command

  - Completeness

    - Feature/ completeness: unable to judge: You are unable to judge this aspect for some reason.

    - Feature/ completeness: low: A partial implementation of the feature. Barely useful.

    - Feature/ completeness: medium: The feature has enough functionality to be useful for some of the users.

    - Feature/ completeness: high: The feature has all functionality to be useful to almost all users.

  - Other

    - Feature/ not hard to test: The feature was not too hard to test manually.

    - Feature/ polished: The feature looks polished (as if done by a professional programmer).

- E. Amount of work:

  Evaluate the amount of work, on a scale of 0 to 30.

  - Consider this PR (history command) as 5 units of effort which means this PR (undo/redo command) is about 15 points of effort. Given that 30 points matches an effort twice that needed for the undo/redo feature (which was given as an example of an A grade project), we expect most students to have efforts lower than 20.

  - Count all implementation/testing/documentation work as mentioned in that person's PPP. Also look at the actual code written by the person.

  - Do not give a high value just to be nice. You will be asked to provide a brief justification for your effort estimates.
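The arithmetic behind the effort scale can be sketched as follows. This is only an illustration of the reference points stated above (the history command PR as 5 units, the undo/redo PR as about 15, and a scale capped at 30); the function name and the idea of expressing work as a multiple of the history PR are assumptions made for the example, not a prescribed estimation method.

```python
# Reference points taken from the text; everything else is illustrative.
HISTORY_PR_UNITS = 5      # effort assigned to the history command PR
UNDO_REDO_PR_UNITS = 15   # approximate effort of the undo/redo PR
MAX_UNITS = 30            # top of the 0-30 scale


def effort_units(multiple_of_history_pr: float) -> float:
    """Convert 'N times the history PR' into units, clamped to the 0-30 scale."""
    units = multiple_of_history_pr * HISTORY_PR_UNITS
    return max(0.0, min(float(MAX_UNITS), units))


if __name__ == "__main__":
    # Work judged to be about three times the history PR lands on the
    # undo/redo reference point.
    print(effort_units(3))
    # Anything beyond six times the history PR is capped at the top of the scale.
    print(effort_units(10))
```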

Processing PE Bug Reports:

There will be a review period for you to respond to the bug reports you received.

Duration: The review period will start around 1 day after the PE (exact time to be announced) and will last until the following Monday midnight. However, you are recommended to finish this task ASAP, to minimize cutting into your exam preparation work.

Bug reviewing is recommended to be done as a team as some of the decisions need team consensus.

Instructions for Reviewing Bug Reports

- First, don't freak out if there are a lot of bug reports. Many can be duplicates and some can be false positives. In any case, we anticipate that all of these products will have some bugs and our penalty for bugs is not harsh. Furthermore, it depends on the severity of the bug. Some bugs may not even be penalized.

- Do not edit the subject or the description. Your response (if any) should be added as a comment.

- You may (but are not required to) close the bug report after you are done processing it, as a convenient means of separating the 'processed' issues from 'not yet processed' issues.

- If the bug is reported multiple times, mark all copies EXCEPT one as duplicates using the duplicate tag (if the duplicates have different severity levels, you should keep the one with the highest severity). In addition, use this technique to indicate which issue they are duplicates of. Duplicates can be omitted from processing steps given below.

- If a bug seems to be for a different product (i.e. wrongly assigned to your team), let us know (email prof).

- Decide if it is a real bug and apply ONLY one of these labels.

  Response Labels:

  - response.Accepted: You accept it as a bug.

  - response.NotInScope: It is a valid issue but not something the team should be penalized for e.g., it was not related to features delivered in v1.4.

  - response.Rejected: What the tester treated as a bug is in fact the expected behavior. You can reject bugs that you inherited from AB4.

  - response.CannotReproduce: You are unable to reproduce the behavior reported in the bug after multiple tries.

  - response.IssueUnclear: The issue description is not clear. Don't post comments asking the tester to give more info. The tester will not be able to see those comments because the bug reports are anonymized.

- If applicable, decide the type of bug. Bugs without type.* are considered type.FunctionalityBug by default (which are liable to a heavier penalty).

  Bug Type Labels:

  - type.FeatureFlaw: some functionality missing from a feature delivered in v1.4 in a way that the feature becomes less useful to the intended target user for normal usage. i.e., the feature is not 'complete'. In other words, an acceptance testing bug that falls within the scope of v1.4 features. These issues are counted against the 'depth and completeness' of the feature.

  - type.FunctionalityBug: the bug is a flaw in how the product works.

  - type.DocTypo: A minor spelling/grammar error in the documentation. Does not affect the user.

  - type.DocumentationBug: A flaw in the documentation that can potentially affect the user e.g., a missing step, a wrong instruction

- If you disagree with the original severity assigned to the bug, you may change it to the correct level.

  For all cases of downgrading severity or non-acceptance of a bug, you must add a comment justifying your stance. All such cases will be double-checked by the teaching team and indiscriminate downgrading/non-acceptance of bugs without a good justification, if deemed as a case of trying to game the system, may be penalized as it is unfair to the tester.

  Bug Severity labels:

  - severity.Low: A flaw that is unlikely to affect normal operations of the product. Appears only in very rare situations and causes a minor inconvenience only.

  - severity.Medium: A flaw that causes occasional inconvenience to some users but they can continue to use the product.

  - severity.High: A flaw that affects most users and causes major problems for users. i.e., makes the product almost unusable for most users.

- Decide who should fix the bug. Use the Assignees field to assign the issue to that person(s). There is no need to actually fix the bug though. It's simply an indication/acceptance of responsibility. If there is no assignee, we will distribute the penalty for that bug (if any) among all team members.

- We recommend (but do not enforce) that the feature owner should be assigned bugs related to the feature, even if the bug was caused indirectly by someone else. Reason: The feature owner should have defended the feature against bugs using automated tests and defensive coding techniques.

- Add an explanatory comment explaining your choice of labels and assignees.

- We recommend choosing type.*, severity.* and assignee even for bugs you are not accepting. Reason: your non-acceptance may be rejected by the tutor later, in which case we need to grade it as an accepted bug.
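The defaulting and duplicate-handling rules in the review instructions can be summarized in a small sketch. The label strings come from the text; the BugReport class, its field names, and the helper functions are hypothetical and exist only to make the rules concrete. This is not how the teaching team's tooling is implemented, and it does not compute any penalty.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Label names are from the text; their ordering here encodes Low < Medium < High.
SEVERITY_ORDER = ["severity.Low", "severity.Medium", "severity.High"]
DEFAULT_SEVERITY = "severity.Low"        # reports without a severity label
DEFAULT_TYPE = "type.FunctionalityBug"   # reports without a type.* label


@dataclass
class BugReport:
    """Hypothetical, minimal model of an issue in the PE tracker."""
    title: str
    severity: Optional[str] = None
    bug_type: Optional[str] = None
    assignees: List[str] = field(default_factory=list)


def effective_severity(report: BugReport) -> str:
    """Severity used when the label is missing."""
    return report.severity or DEFAULT_SEVERITY


def effective_type(report: BugReport) -> str:
    """Type used when no type.* label is applied."""
    return report.bug_type or DEFAULT_TYPE


def copy_to_keep(copies: List[BugReport]) -> BugReport:
    """Among copies of the same bug, keep the one with the highest severity."""
    return max(copies, key=lambda r: SEVERITY_ORDER.index(effective_severity(r)))


def responsible_members(report: BugReport, team: List[str]) -> List[str]:
    """Assignees if any; otherwise responsibility falls on the whole team."""
    return report.assignees or list(team)
```

For example, given two copies of the same bug where only one carries severity.High, copy_to_keep returns the severity.High copy and the other would be marked as the duplicate.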

Grading: Taking part in the PE dry run is strongly encouraged as it can affect your grade in the following ways.

- If the product you are allocated to test in the Practical Exam (at v1.4) had a very low bug count, we will consider your performance in PE dry run as well when grading the PE.

- PE dry run will help you practice for the actual PE.

- Taking part in the PE dry run will earn you participation points.

- There is no penalty for bugs reported in your product. Every bug you find is a win-win for you and the team whose product you are testing.

Objectives:

- To train you to do manual testing, bug reporting, bug triaging, bug fixing, communicating with users/testers/developers, evaluating products etc.

- To help you improve your product before the final submission.

Preparation:

-

Ensure that you can access the relevant issue tracker given below:

-- for PE Dry Run (at v1.3): nus-cs2103-AY1819S2/pe-dry-run

-- for PE (at v1.4): nus-cs2103-AY1819S2/pe (will open only near the actual PE)

- These are private repos! If you cannot access the relevant repo, you may not have accepted the invitation to join the GitHub org used by the module. Go to https://github.com/orgs/nus-cs2103-AY1819S2/invitation to accept the invitation.

- If you cannot find the invitation, post in our forum.

-

Ensure you have access to a computer that is able to run module projects e.g. has the right Java version.

-

Have a good screen grab tool with annotation features so that you can quickly take a screenshot of a bug, annotate it, and post in the issue tracker.

- 💡 You can use Ctrl+V to paste a picture from the clipboard into a text box in GitHub issue tracker.

-

Charge your computer before coming to the PE session. The testing venue may not have enough charging points.

During the session:

- Take note of the team you are to test. The allocation is distributed via LumiNUS gradebook.

- Download the latest jar file from the team's GitHub page. Copy it to an empty folder.

Testing instructions for PE and PE Dry Run

-

What to test:

- PE Dry Run (at v1.3):

- Test the product based on the User Guide (the UG is most likely accessible using the help command).

- Do system testing first i.e., does the product work as specified by the documentation? If there is time left, you can do acceptance testing as well i.e., does the product solve the problem it claims to solve?

- PE (at v1.4):

- Test based on the Developer Guide (Appendix named Instructions for Manual Testing) and the User Guide. The testing instructions in the Developer Guide can provide you some guidance but if you follow those instructions strictly, you are unlikely to find many bugs. You can deviate from the instructions to probe areas that are more likely to have bugs.

- As before, do both system testing and acceptance testing but give priority to system testing as system testing bugs can earn you more credit.


-

These are considered bugs:

- Behavior differs from the User Guide

- A legitimate user behavior is not handled e.g. incorrect commands, extra parameters

- Behavior is not specified and differs from normal expectations e.g. error message does not match the error

- The feature does not solve the stated problem of the intended user i.e., the feature is 'incomplete'

- Problems in the User Guide e.g., missing/incorrect info

-

Where to report bugs: Post bug in the following issue trackers (not in the team's repo):

- PE Dry Run (at v1.3): nus-cs2103-AY1819S2/pe-dry-run.

- PE (at v1.4): nus-cs2103-AY1819S2/pe.

-

Bug report format:

- Post bugs as you find them (i.e., do not wait to post all bugs at the end) because the issue tracker will close exactly at the end of the allocated time.

- Do not use team ID in bug reports. Reason: to prevent others copying your bug reports

- Write good quality bug reports; poor quality or incorrect bug reports will not earn credit.

- Use a descriptive title.

- Give a good description of the bug with steps to reproduce and screenshots.

- Assign a severity to the bug report. Bug reports without a severity label are considered severity.Low (lower severity bugs earn lower credit).

- Each bug should be a separate issue. The issue will be initialized with one of the following templates:

Bug report

Describe the bug A clear and concise description of what the bug is.

To Reproduce Steps to reproduce the behavior:

- Go to '...'

- Click on '....'

- Scroll down to '....'

- See error

Expected behavior A clear and concise description of what you expected to happen.

Screenshots If applicable, add screenshots to help explain your problem.

Additional context Add any other context about the problem here.

Suggestions for improving the product

Please describe the problem A clear and concise description of what the problem is. Eg. I have to scroll three times to find [...]

Describe the improvement you'd like to suggest A clear and concise description of what you want to happen. Eg., Better to sort based on [...] for quick access

Additional context Add any other context or screenshots about the feature request here.

Bug Severity labels:

- severity.Low: A flaw that is unlikely to affect normal operations of the product. Appears only in very rare situations and causes a minor inconvenience only.

- severity.Medium: A flaw that causes occasional inconvenience to some users but they can continue to use the product.

- severity.High: A flaw that affects most users and causes major problems for users, i.e., makes the product almost unusable for most users.

-

About posting suggestions:

- PE Dry Run (at v1.3): You can also post suggestions on how to improve the product. 💡 Be diplomatic when reporting bugs or suggesting improvements. For example, instead of criticising the current behavior, simply suggest alternatives to consider.

- PE (at v1.4): Do not post suggestions. But if a feature is missing a critical functionality that makes the feature less useful to the intended user, it can be reported as a bug.

-

If the product doesn't work at all: If the product fails catastrophically e.g., cannot even launch, you can test the fallback team allocated to you. But in this case you must inform us immediately after the session so that we can send your bug reports to the correct team.

At the end of the project each student is required to submit a Project Portfolio Page.

-

Objective:

- For you to use (e.g. in your resume) as a well-documented data point of your SE experience

- For us to use as a data point to evaluate your:

- contributions to the project

- documentation skills

-

Sections to include:

-

Overview: A short overview of your product to provide some context to the reader.

-

Summary of Contributions:

- Code contributed: Give a link to your code on the Project Code Dashboard, which should be https://nus-cs2103-ay1819s2.github.io/cs2103-dashboard/#=undefined&search=githbub_username_in_lower_case (replace githbub_username_in_lower_case with your actual username in lower case e.g., johndoe). This link is also available in the Project List Page, linked to the icon under your photo.

- Features implemented: A summary of the features you implemented. If you implemented multiple features, you are recommended to indicate which one is the biggest feature.

- Other contributions:

- Contributions to project management e.g., setting up project tools, managing releases, managing issue tracker etc.

- Evidence of helping others e.g. responses you posted in our forum, bugs you reported in other teams' products

- Evidence of technical leadership e.g. sharing useful information in the forum


-

Contributions to the User Guide: Reproduce the parts in the User Guide that you wrote. This can include features you implemented as well as features you propose to implement.

The purpose of allowing you to include proposed features is to provide you more flexibility to show your documentation skills. e.g. you can bring in a proposed feature just to give you an opportunity to use a UML diagram type not used by the actual features.

- Contributions to the Developer Guide: Reproduce the parts in the Developer Guide that you wrote. Ensure there is enough content to evaluate your technical documentation skills and UML modelling skills. You can include descriptions of your design/implementations, possible alternatives, pros and cons of alternatives, etc.

-

If you plan to use the PPP in your Resume, you can also include your SE work outside of the module (will not be graded)

-

-

Format:

-

File name: docs/team/githbub_username_in_lower_case.adoc e.g., docs/team/johndoe.adoc

- Follow the example in the AddressBook-Level4

-

💡 You can use Asciidoc's include feature to include sections from the developer guide or the user guide in your PPP. Follow the example in the sample.

- It is assumed that all contents in the PPP were written primarily by you. If any section is written by someone else e.g. someone else described the feature in the User Guide but you implemented the feature, clearly state that the section was written by someone else (e.g. Start of Extract [from: User Guide] written by Jane Doe). Reason: Your writing skills will be evaluated based on the PPP.

-

-

Page limit:

| Content | Limit (pages) |
|---|---|
| Overview + Summary of contributions | 0.5-1 (soft limit) |
| Contributions to the User Guide | 1-3 (soft limit) |
| Contributions to the Developer Guide | 3-6 (soft limit) |
| Total | 5-10 (strict) |

- The page limits given above are after converting to PDF format. The actual amount of content you require is less than what these numbers suggest because the HTML → PDF conversion adds a lot of spacing around content.

- Reason for page limit: These submissions are peer-graded (in the PE) which needs to be done in a limited time span.

If you have more content than the limit given above, you can give representative samples of the UG and DG that showcase your documentation skills. Those samples should be understandable on their own. For the parts left out, you can give an abbreviated version and refer the reader to the full UG/DG for more details.

It's similar to giving extra details as appendices; the reader will look at the UG/DG if the PPP is not enough to make a judgment. For example, when judging documentation quality, if the part in the PPP is not well-written, there is no point reading the rest in the main UG/DG. That's why you need to put the most representative part of your writings in the PPP and still give an abbreviated version of the rest in the PPP itself. Even when judging the quantity of work, the reader should be able to get a good sense of the quantity by combining what is quoted in the PPP and your abbreviated description of the missing part. There is no guarantee that the evaluator will read the full document.
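The PPP file name format, the dashboard link, and the page limits described in this section can be sketched as a few helper functions. This is only an illustrative sketch, not a tool provided by the module: the function names and the section keys (`overview_and_summary`, `user_guide`, `developer_guide`) are made up for this example, and page counts are assumed to be measured on the converted PDF.

```python
# Illustrative sketch only; names below are hypothetical, not part of any module tooling.

# Soft page limits per PPP section as (min, max) PDF pages.
SOFT_LIMITS = {
    "overview_and_summary": (0.5, 1),
    "user_guide": (1, 3),
    "developer_guide": (3, 6),
}
TOTAL_LIMIT = (5, 10)  # strict limit on the whole PPP


def ppp_file_path(github_username: str) -> str:
    """Return the expected PPP path: docs/team/<username in lower case>.adoc."""
    return f"docs/team/{github_username.lower()}.adoc"


def dashboard_url(github_username: str) -> str:
    """Return the Project Code Dashboard link for the given username."""
    return (
        "https://nus-cs2103-ay1819s2.github.io/cs2103-dashboard/"
        f"#=undefined&search={github_username.lower()}"
    )


def check_page_counts(pages: dict) -> list:
    """Return a list of warnings for sections (or the total) outside the limits."""
    warnings = []
    for section, (low, high) in SOFT_LIMITS.items():
        count = pages.get(section, 0)
        if not low <= count <= high:
            warnings.append(f"{section}: {count} pages is outside the soft limit {low}-{high}")
    total = sum(pages.values())
    if not TOTAL_LIMIT[0] <= total <= TOTAL_LIMIT[1]:
        warnings.append(f"total: {total} pages is outside the strict limit 5-10")
    return warnings
```

For example, `ppp_file_path("JohnDoe")` gives `docs/team/johndoe.adoc`, and a PPP with 1 + 2 + 4 pages passes all checks.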

After the session:

- We'll transfer the relevant bug reports to your repo over the weekend. Once you have received the bug reports for your product, it is up to you to decide whether you will act on reported issues before the final submission v1.4. For some issues, the correct decision could be to reject or postpone to a version beyond v1.4.

- You can post in the issue thread to communicate with the tester e.g. to ask for more info, etc. However, the tester is not obliged to respond.

- 💡 Do not argue with the issue reporter to try to convince that person that your way is correct/better. If at all, you can gently explain the rationale for the current behavior but do not waste time getting involved in long arguments. If you think the suggestion/bug is unreasonable, just thank the reporter for their view and close the issue.

Deliverable: Practical Exam

Objectives:

- The primary objective of the PE is to increase the rigor of project assessment. Assessing most aspects of the project involves an element of subjectivity. As the project counts for 50% of the final grade, it is not prudent to rely on evaluations of tutors alone as there can be significant variations between how different tutors assess projects. That is why we collect more data points via the PE so as to minimize the chance of your project being affected by evaluator-bias.

- Note that none of the significant project grade components are calculated solely based on peer ratings. Rather, PE data are mostly used to cross-validate tutor assessments and identify cases that need further investigation. When peer inputs are used for grading, usually they are combined with tutor evaluations with appropriate weight for each. In some cases ratings from team members are given a higher weight compared to ratings from other teams, if that is appropriate.

- As a bonus, PE also gives us an opportunity to evaluate your manual testing skills, product evaluation skills, effort estimation skills etc.

- Note that the PE is not a means of pitting you against each other. Developers and testers play for the same side; they need to push each other to improve the quality of their work -- not bring down each other.

When, where: Week 13 lecture

Grading:

- Your performance in the practical exam will affect your final grade and that of your peers, as explained in the Admin: Project Assessment section.

- As your submissions can affect the grades of peers, note that we have put in measures to identify insincere/random evaluations and penalize accordingly.

Preparation:

-

Ensure that you can access the relevant issue tracker given below:

-- for PE Dry Run (at v1.3): nus-cs2103-AY1819S2/pe-dry-run

-- for PE (at v1.4): nus-cs2103-AY1819S2/pe (will open only near the actual PE)

- These are private repos! If you cannot access the relevant repo, you may not have accepted the invitation to join the GitHub org used by the module. Go to https://github.com/orgs/nus-cs2103-AY1819S2/invitation to accept the invitation.

- If you cannot find the invitation, post in our forum.

-

Ensure you have access to a computer that is able to run module projects e.g. has the right Java version.

-

Have a good screen grab tool with annotation features so that you can quickly take a screenshot of a bug, annotate it, and post in the issue tracker.

- 💡 You can use Ctrl+V to paste a picture from the clipboard into a text box in GitHub issue tracker.

-

Charge your computer before coming to the PE session. The testing venue may not have enough charging points.

During:

- Take note of your team to test. It will be given to you by the teaching team (distributed via LumiNUS gradebook).

- Download from LumiNUS all files submitted by the team (i.e. jar file, User Guide, Developer Guide, and Project Portfolio Pages) into an empty folder.

- [60 minutes] Test the product and report bugs as described below:

Testing instructions for PE and PE Dry Run

-

What to test:

- PE Dry Run (at v1.3):

- Test the product based on the User Guide (the UG is most likely accessible using the help command).

- Do system testing first i.e., does the product work as specified by the documentation? If there is time left, you can do acceptance testing as well i.e., does the product solve the problem it claims to solve?

- PE (at v1.4):

- Test based on the Developer Guide (Appendix named Instructions for Manual Testing) and the User Guide. The testing instructions in the Developer Guide can provide you some guidance but if you follow those instructions strictly, you are unlikely to find many bugs. You can deviate from the instructions to probe areas that are more likely to have bugs.

- As before, do both system testing and acceptance testing but give priority to system testing as system testing bugs can earn you more credit.


-

These are considered bugs:

- Behavior differs from the User Guide

- A legitimate user behavior is not handled e.g. incorrect commands, extra parameters

- Behavior is not specified and differs from normal expectations e.g. error message does not match the error

- The feature does not solve the stated problem of the intended user i.e., the feature is 'incomplete'

- Problems in the User Guide e.g., missing/incorrect info

-

Where to report bugs: Post bug in the following issue trackers (not in the team's repo):

- PE Dry Run (at v1.3): nus-cs2103-AY1819S2/pe-dry-run.

- PE (at v1.4): nus-cs2103-AY1819S2/pe.

-

Bug report format:

- Post bugs as you find them (i.e., do not wait to post all bugs at the end) because the issue tracker will close exactly at the end of the allocated time.

- Do not use team ID in bug reports. Reason: to prevent others copying your bug reports

- Write good quality bug reports; poor quality or incorrect bug reports will not earn credit.

- Use a descriptive title.

- Give a good description of the bug with steps to reproduce and screenshots.

- Assign a severity to the bug report. Bug reports without a severity label are considered severity.Low (lower severity bugs earn lower credit).

- Each bug should be a separate issue. The issue will be initialized with one of the following templates:

Bug report

Describe the bug A clear and concise description of what the bug is.

To Reproduce Steps to reproduce the behavior:

- Go to '...'

- Click on '....'

- Scroll down to '....'

- See error

Expected behavior A clear and concise description of what you expected to happen.

Screenshots If applicable, add screenshots to help explain your problem.

Additional context Add any other context about the problem here.

Suggestions for improving the product

Please describe the problem A clear and concise description of what the problem is. Eg. I have to scroll three times to find [...]

Describe the improvement you'd like to suggest A clear and concise description of what you want to happen. Eg., Better to sort based on [...] for quick access

Additional context Add any other context or screenshots about the feature request here.

Bug Severity labels:

- severity.Low: A flaw that is unlikely to affect normal operations of the product. Appears only in very rare situations and causes a minor inconvenience only.

- severity.Medium: A flaw that causes occasional inconvenience to some users but they can continue to use the product.

- severity.High: A flaw that affects most users and causes major problems for users, i.e., makes the product almost unusable for most users.

-

About posting suggestions:

- PE Dry Run (at v1.3): You can also post suggestions on how to improve the product. 💡 Be diplomatic when reporting bugs or suggesting improvements. For example, instead of criticising the current behavior, simply suggest alternatives to consider.

- PE (at v1.4): Do not post suggestions. But if a feature is missing a critical functionality that makes the feature less useful to the intended user, it can be reported as a bug.

-

If the product doesn't work at all: If the product fails catastrophically e.g., cannot even launch, you can test the fallback team allocated to you. But in this case you must inform us immediately after the session so that we can send your bug reports to the correct team.

- [Remainder of the session] Evaluate the following aspects. Note down your evaluation in a hard copy (as a backup). Submit via TEAMMATES. You are recommended to complete this during the PE session but you have until the end of the day to submit (or revise) your submissions.

- A. Product Design []:

Evaluate the product design based on how the product V2.0 (not V1.4) is described in the User Guide.

-

unable to judge: You are unable to judge this aspect for some reason e.g., UG is not available or does not have enough information.

- Target user:

- target user specified and appropriate: The target user is clearly specified, prefers typing over other modes of input, and is not too general (should be narrowed to a specific user group with certain characteristics).

- value specified and matching: The value offered by the product is clearly specified and matches the target user.

- Value to the target user:

- value: low: The value to the target user is low. The app is not worth using.

- value: medium: Some small group of target users might find the app worth using.

- value: high: Most of the target users are likely to find the app worth using.

- Feature-fit:

- feature-fit: low: Features don't seem to fit together.

- feature-fit: medium: Some features fit together but some don't.

- feature-fit: high: All features fit together.

- polished: The product looks well-designed.

- B. Quality of user docs []:

Evaluate the quality of user documentation based on the parts of the user guide written by the person, as reproduced in the project portfolio. Evaluate from an end-user perspective.

-

UG/ unable to judge: Less than 1 page worth of UG content written by the student or cannot find PPP

- UG/ good use of visuals: Uses visuals e.g., screenshots.

- UG/ good use of examples: Uses examples e.g., sample inputs/outputs.

- UG/ just enough information: Not too much information. All important information is given.

- UG/ easy to understand: The information is easy to understand for the target audience.

- UG/ polished: The document looks neat, well-formatted, and professional.

- C. Quality of developer docs []:

Evaluate the quality of developer documentation based on the developer docs cited/reproduced in the respective project portfolio page. Evaluate from the perspective of a new developer trying to understand how the features are implemented.

-

DG/ unable to judge: Less than 0.5 pages worth of content OR other problems in the document e.g. looks like the wrong content was included.

- DG/ too little: 0.5 - 1 page of documentation

- Diagrams:

- DG/ types of UML diagrams: 1: Only one type of diagram used (types: Class Diagrams, Object Diagrams, Sequence Diagrams, Activity Diagrams, Use Case Diagrams)

- DG/ types of UML diagrams: 2: Two types of diagrams used

- DG/ types of UML diagrams: 3+: Three or more types of diagrams used

- DG/ UML diagrams suitable: The diagrams are used for the right purpose

- DG/ UML notation correct: No more than one minor error in the UML notation

- DG/ diagrams not repetitive: No evidence of repeating the same diagram with minor differences

- DG/ diagrams not too complicated: Diagrams don't cram too much information into them

- DG/ diagrams integrates with text: Diagrams are well integrated into the textual explanations

- DG/ easy to understand: The document is easy to understand/follow

- DG/ just enough information: Not too much information. All important information is given.

- DG/ polished: The document looks neat, well-formatted, and professional.

- D. Feature Quality []:

Evaluate the biggest feature done by the student for difficulty, completeness, and testability. Note: examples given below assume that AB4 did not have the commands edit, undo, and redo.

- Difficulty

-

Feature/ difficulty: unable to judge: You are unable to judge this aspect for some reason.

- Feature/ difficulty: low: e.g. make the existing find command case insensitive.

- Feature/ difficulty: medium: e.g. an edit command that requires the user to type all fields, even the ones that are not being edited.

- Feature/ difficulty: high: e.g., undo/redo command

- Completeness

- Feature/ completeness: unable to judge: You are unable to judge this aspect for some reason.

- Feature/ completeness: low: A partial implementation of the feature. Barely useful.

- Feature/ completeness: medium: The feature has enough functionality to be useful for some of the users.

- Feature/ completeness: high: The feature has all functionality to be useful to almost all users.

- Other

- Feature/ not hard to test: The feature was not too hard to test manually.

- Feature/ polished: The feature looks polished (as if done by a professional programmer).

-

- E. Amount of work []:

Evaluate the amount of work, on a scale of 0 to 30.

- Consider this PR (history command) as 5 units of effort, which means this PR (undo/redo command) is about 15 points of effort. Given that 30 points matches an effort twice that needed for the undo/redo feature (which was given as an example of an A grade project), we expect most students to have efforts lower than 20.

- Count all implementation/testing/documentation work as mentioned in that person's PPP. Also look at the actual code written by the person.

- Do not give a high value just to be nice. You will be asked to provide a brief justification for your effort estimates.

Processing PE Bug Reports:

There will be a review period for you to respond to the bug reports you received.

Duration: The review period will start around 1 day after the PE (exact time to be announced) and will last until the following Monday midnight. However, you are recommended to finish this task ASAP, to minimize cutting into your exam preparation work.

Bug reviewing is recommended to be done as a team as some of the decisions need team consensus.

Instructions for Reviewing Bug Reports

-

First, don't freak out if there are a lot of bug reports. Many can be duplicates and some can be false positives. In any case, we anticipate that all of these products will have some bugs and our penalty for bugs is not harsh. Furthermore, the penalty depends on the severity of the bug. Some bugs may not even be penalized.

-

Do not edit the subject or the description. Your response (if any) should be added as a comment.

-

You may (but are not required to) close the bug report after you are done processing it, as a convenient means of separating the 'processed' issues from 'not yet processed' issues.

-

If the bug is reported multiple times, mark all copies EXCEPT one as duplicates using the duplicate tag (if the duplicates have different severity levels, you should keep the one with the highest severity). In addition, use this technique to indicate which issue they are duplicates of. Duplicates can be omitted from the processing steps given below.

- If a bug seems to be for a different product (i.e. wrongly assigned to your team), let us know (email prof).

-

Decide if it is a real bug and apply ONLY one of these labels.

Response Labels:

- response.Accepted: You accept it as a bug.

- response.NotInScope: It is a valid issue but not something the team should be penalized for e.g., it was not related to features delivered in v1.4.

- response.Rejected: What the tester treated as a bug is in fact the expected behavior. You can reject bugs that you inherited from AB4.

- response.CannotReproduce: You are unable to reproduce the behavior reported in the bug after multiple tries.

- response.IssueUnclear: The issue description is not clear. Don't post comments asking the tester to give more info. The tester will not be able to see those comments because the bug reports are anonymized.

- If applicable, decide the type of bug. Bugs without type.* are considered type.FunctionalityBug by default (which are liable to a heavier penalty).

Bug Type Labels:

- type.FeatureFlaw: Some functionality is missing from a feature delivered in v1.4 in a way that the feature becomes less useful to the intended target user for normal usage, i.e., the feature is not 'complete'. In other words, an acceptance testing bug that falls within the scope of v1.4 features. These issues are counted against the 'depth and completeness' of the feature.

- type.FunctionalityBug: The bug is a flaw in how the product works.

- type.DocTypo: A minor spelling/grammar error in the documentation. Does not affect the user.

- type.DocumentationBug: A flaw in the documentation that can potentially affect the user e.g., a missing step, a wrong instruction

- If you disagree with the original severity assigned to the bug, you may change it to the correct level.

For all cases of downgrading severity or non-acceptance of a bug, you must add a comment justifying your stance. All such cases will be double-checked by the teaching team and indiscriminate downgrading/non-acceptance of bugs without a good justification, if deemed as a case of trying to game the system, may be penalized as it is unfair to the tester.

Bug Severity labels:

- severity.Low: A flaw that is unlikely to affect normal operations of the product. Appears only in very rare situations and causes a minor inconvenience only.

- severity.Medium: A flaw that causes occasional inconvenience to some users but they can continue to use the product.

- severity.High: A flaw that affects most users and causes major problems for users, i.e., makes the product almost unusable for most users.

-

Decide who should fix the bug. Use the Assignees field to assign the issue to the responsible person(s). There is no need to actually fix the bug; the assignment is simply an indication/acceptance of responsibility. If there is no assignee, we will distribute the penalty for that bug (if any) among all team members.

- We recommend (but do not enforce) that the feature owner be assigned bugs related to the feature, even if the bug was caused indirectly by someone else. Reason: The feature owner should have defended the feature against bugs using automated tests and defensive coding techniques.

-

Add an explanatory comment explaining your choice of labels and assignees.

-

We recommend choosing type.*, severity.* and an assignee even for bugs you are not accepting. Reason: your non-acceptance may be rejected by the tutor later, in which case we need to grade it as an accepted bug.
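Two of the rules in the reviewing instructions above lend themselves to a small sketch: the default labels (a report without a severity label counts as severity.Low, and one without a type.* label counts as type.FunctionalityBug), and keeping the highest-severity copy among duplicates. The functions and the data layout below (a mapping from a hypothetical issue number to its list of labels) are assumptions made for illustration; they are not how the teaching team's scripts work.

```python
# Illustrative sketch only; function names and data layout are hypothetical.

SEVERITY_RANK = {"severity.Low": 0, "severity.Medium": 1, "severity.High": 2}
DEFAULT_SEVERITY = "severity.Low"
DEFAULT_TYPE = "type.FunctionalityBug"


def effective_labels(labels):
    """Return (severity, type) for a report, applying the documented defaults."""
    severity = next((l for l in labels if l.startswith("severity.")), DEFAULT_SEVERITY)
    bug_type = next((l for l in labels if l.startswith("type.")), DEFAULT_TYPE)
    return severity, bug_type


def pick_report_to_keep(duplicates):
    """Given {issue_number: labels} for copies of one bug, return the issue
    number of the copy with the highest severity (the others get the
    duplicate tag). Unknown severity labels are ranked lowest."""
    def rank(issue):
        severity, _ = effective_labels(duplicates[issue])
        return SEVERITY_RANK.get(severity, 0)
    return max(duplicates, key=rank)
```

For instance, given copies `{12: ["severity.Low"], 15: ["severity.High"], 20: []}`, the copy to keep is issue 15.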

Notes for Those Using AB-2 or AB-3 for the Project

There is no explicit penalty for switching to a lower level AB. All projects are evaluated based on the same yardstick irrespective of on which AB it is based. As an AB is given to you as a 'free' head-start, a lower level AB gives you a shorter head-start, which means your final product is likely to be less functional than those from teams using AB-4 unless you progress faster than them. Nevertheless, you should switch to AB2/3 if you feel you can learn more from the project that way, as our goal is to maximize learning, not features.

If your team wants to stay with AB-4 but you want to switch to a lower level AB, let us know so that we can work something out for you.

If you have opted to use AB-2 or AB-3 instead of AB-4 as the basis of your product, please note the following points:

- Set up auto-publishing of documentation similar to AB-4

- Add Project Portfolio Pages (PPP) for members, similar to the example provided in AB-4

- You can convert UG, DG, and PPP into pdf files using instructions provided in AB-4 DG

- Create an About Us page similar to AB-4 and update it as described in the mid-v1.1 progress guide

Set up project repo, start moving UG and DG to the repo, attempt to do local-impact changes to the code base.

Project Management:

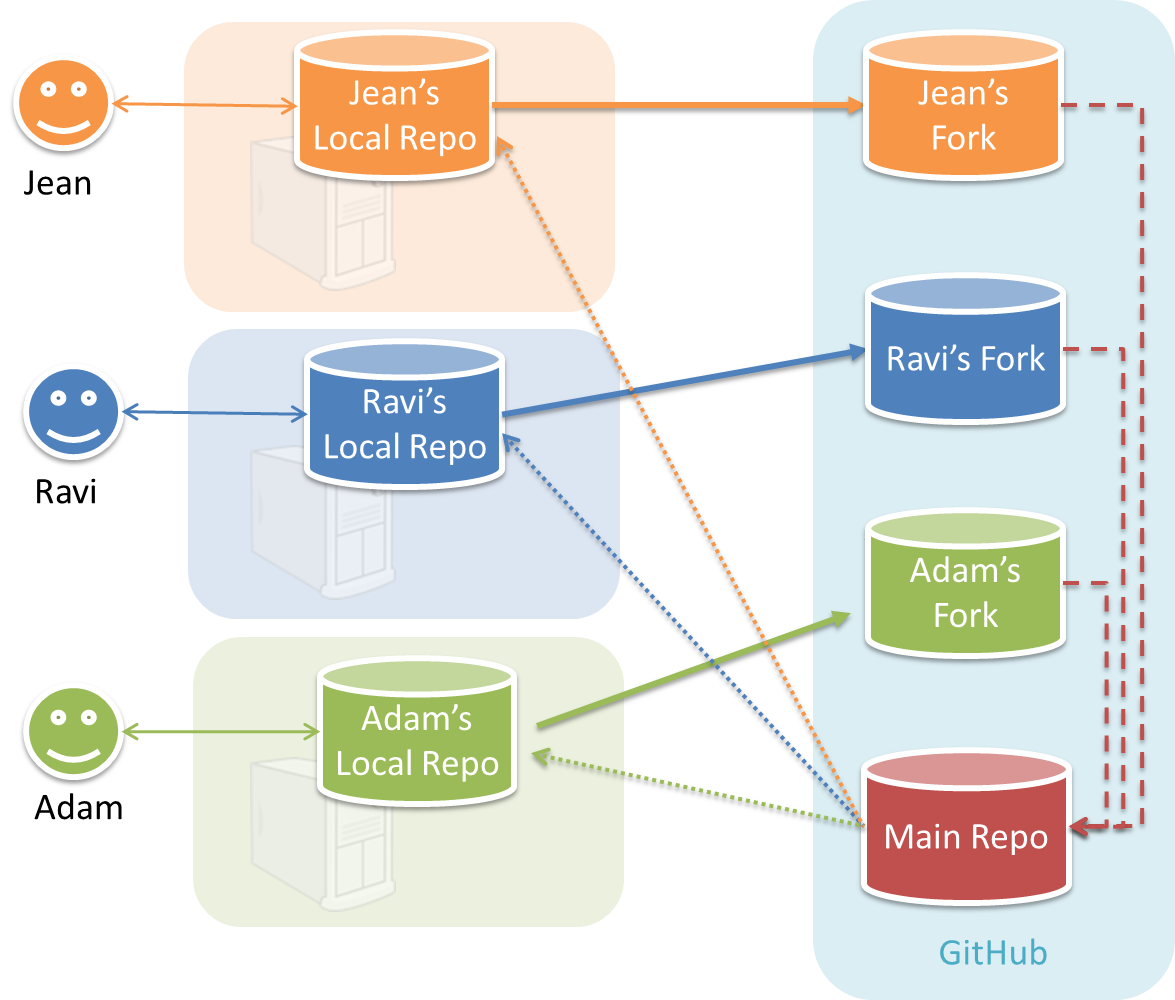

Set up the team org and the team repo as explained below:


Organization setup

Please follow the organization/repo name format precisely: we use scripts to download your code, and they will not be able to detect your work if the names differ.

After receiving your team ID, one team member should do the following steps:

- Create a GitHub organization with the following details:

- Organization name: CS2103-AY1819S2-TEAM_ID. e.g. CS2103-AY1819S2-W12-1

- Plan: Open Source ($0/month)

- Add members to the organization:

- Create a team called developers in your organization.

- Add your team members to the developers team.


Repo setup

Only one team member:

- Fork Address Book Level 4 to your team org.

- Rename the forked repo as main. This repo (let's call it the team repo) is to be used as the repo for your project.

- Ensure the issue tracker of your team repo is enabled. Reason: our bots will be posting your weekly progress reports on the issue tracker of your team repo.

- Ensure your team members have the desired level of access to your team repo.

- Enable Travis CI for the team repo.

- Set up auto-publishing of docs. When set up correctly, your project website should be available via the URL https://cs2103-ay1819s2-{team-id}.github.io/main e.g., https://cs2103-ay1819s2-w13-1.github.io/main/. This also requires you to enable the GitHub Pages feature of your team repo and configure it to serve the website from the gh-pages branch.

- Create a team PR for us to track your project progress: i.e., create a PR from your team repo master branch to the [nus-cs2103-AY1819S2/addressbook-level4] master branch. PR name: [Team ID] Product Name e.g., [T09-2] Contact List Pro. As you merge code to your team repo's master branch, this PR will auto-update to reflect how much your team's product has progressed. In the PR description, @mention the other team members so that they get notified when the tutor adds comments to the PR.
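Because the scripts depend on exact names, it may help to derive the organization name, project website URL, and team PR title from the team ID rather than typing them by hand. The helpers below are a minimal sketch with hypothetical function names; the team ID pattern (one letter, two digits, a hyphen, one digit) is only inferred from the examples W12-1, W13-1, and T09-2 and is an assumption.

```python
import re

# Illustrative sketch only; function names are hypothetical.


def is_plausible_team_id(team_id: str) -> bool:
    """Check the team ID shape inferred from examples such as W12-1 (assumption)."""
    return re.fullmatch(r"[A-Z]\d{2}-\d", team_id) is not None


def org_name(team_id: str) -> str:
    """GitHub organization name, e.g. CS2103-AY1819S2-W12-1."""
    return f"CS2103-AY1819S2-{team_id}"


def website_url(team_id: str) -> str:
    """Project website URL served from the gh-pages branch of the main repo."""
    return f"https://cs2103-ay1819s2-{team_id.lower()}.github.io/main/"


def team_pr_title(team_id: str, product_name: str) -> str:
    """Title of the team PR, e.g. [T09-2] Contact List Pro."""
    return f"[{team_id}] {product_name}"
```

For example, `website_url("W13-1")` gives `https://cs2103-ay1819s2-w13-1.github.io/main/`, matching the example URL above.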

All team members:

- Watch the `main` repo (created above), i.e., go to the repo and click on the `watch` button to subscribe to activities of the repo.

- Fork the `main` repo to your personal GitHub account.

- Clone the fork to your computer.

- Recommended: Set it up as an IntelliJ project (follow the instructions in the Developer Guide carefully).

- Set up the developer environment on your computer. You are recommended to use JDK 9 for AB-4 as some of the libraries used in AB-4 have not been updated to support Java 10 yet. JDK 9 can be downloaded from the Java Archive.

Note that some of our download scripts depend on the following folder paths. Please do not alter those paths in your project.

- `/src/main`
- `/src/test`
- `/docs`
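
As a quick sanity check that these folders are still where the download scripts expect them, something like the following could be run from the project root. This is a minimal sketch under stated assumptions: the class name `RequiredPathsCheck` and its methods are hypothetical, and the course's actual scripts are not shown in this document, so the check only mirrors the three paths listed above.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Hypothetical pre-push check; it only mirrors the folder list given in the text. */
final class RequiredPathsCheck {
    // Folder paths named in the text, relative to the project root.
    static final List<String> REQUIRED = Arrays.asList("src/main", "src/test", "docs");

    /** Returns the required folders that do not exist under the given project root. */
    static List<String> missingPaths(Path projectRoot) {
        List<String> missing = new ArrayList<>();
        for (String relative : REQUIRED) {
            if (!Files.isDirectory(projectRoot.resolve(relative))) {
                missing.add(relative);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        // Use the first argument as the project root, or the current directory by default.
        Path root = Paths.get(args.length > 0 ? args[0] : ".");
        List<String> missing = missingPaths(root);
        if (missing.isEmpty()) {
            System.out.println("All required folders are present.");
        } else {
            System.out.println("Missing folders: " + missing);
        }
    }
}
```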

When updating code in the repo, follow the workflow explained below:

Workflow

Before you do any coding for the project,

- Ensure you have set the Git username correctly (as explained in Appendix E) on all computers you use for coding.

- Read our reuse policy (in Admin: Appendix B), in particular, how to give credit when you reuse code from the Internet or classmates:

Setting Git Username to Match GitHub Username

We use various tools to analyze your code. For us to be able to identify your commits, you should use the GitHub username as your Git username as well. If there is a mismatch, or if you use multiple user names for Git, our tools might miss some of your work and as a result you might not get credit for some of your work.

On each computer you use for coding, after installing Git, you should set the Git username as follows.

- Open a command window that can run Git commands (e.g., Git bash window)

- Run the command `git config --global user.name YOUR_GITHUB_USERNAME`, e.g., `git config --global user.name JohnDoe`

More info about setting Git username is here.

Policy on reuse

Reuse is encouraged. However, note that reuse has its own costs (such as the learning curve, additional complexity, usage restrictions, and unknown bugs). Furthermore, you will not be given credit for work done by others. Rather, you will be given credit for using work done by others.

- You are allowed to reuse work from your classmates, subject to the following conditions:
  - The work has been published by us or the authors.
  - You clearly give credit to the original author(s).

- You are allowed to reuse work from external sources, subject to the following conditions:
  - The work comes from a source of 'good standing' (such as an established open source project). This means you cannot reuse code written by an outside 'friend'.
  - You clearly give credit to the original author. Acknowledge use of third party resources clearly e.g. in the welcome message, splash screen (if any) or under the 'about' menu. If you are open about reuse, you are less likely to get into trouble if you unintentionally reused something copyrighted.
  - You do not violate the license under which the work has been released. Please do not use 3rd-party images/audio in your software unless they have been specifically released to be used freely. Just because you found it on the Internet does not mean it is free for reuse.
  - Always get permission from us before you reuse third-party libraries. Please post your 'request to use 3rd party library' in our forum. That way, the whole class gets to see what libraries are being used by others.

Giving credit for reused work

Given below is how to give credit for things you reuse from elsewhere. These requirements are specific to this module, i.e., not applicable outside the module (outside the module you should follow the rules specified by your employer and the license of the reused work).

If you used a third party library:

- Mention it in the `README.adoc` (under the Acknowledgements section).

- Mention it in the Project Portfolio Page if the library has a significant relevance to the features you implemented.

If you reused code snippets found on the Internet (e.g. from StackOverflow answers), referred to code in another software, or referred to project code by a current/past student:

- If you read the code to understand the approach and implemented it yourself, mention it as a comment.

  Example:

      //Solution below adapted from https://stackoverflow.com/a/16252290
      {Your implementation of the reused solution here ...}

- If you copy-pasted a non-trivial code block (possibly with minor modifications such as renaming, layout changes, changes to comments, etc.), also mark the code block as reused code (using `@@author` tags).

  Format:

      //@@author {yourGithubUsername}-reused
      //{Info about the source...}

      {Reused code (possibly with minor modifications) here ...}

      //@@author

  Example:

      persons = getList();
      //@@author johndoe-reused
      //Reused from https://stackoverflow.com/a/34646172 with minor modifications
      Collections.sort(persons, new Comparator<CustomData>() {
          @Override
          public int compare(CustomData lhs, CustomData rhs) {
              return lhs.customInt > rhs.customInt ? -1 : (lhs.customInt < rhs.customInt) ? 1 : 0;
          }
      });
      //@@author
      return persons;

Adding @@author tags to indicate authorship

- Mark your code with a `//@@author {yourGithubUsername}`. Note the double `@`.

  The `//@@author` tag should indicate the beginning of the code you wrote. The code up to the next `//@@author` tag or the end of the file (whichever comes first) will be considered as written by that author. Here is a sample code file:

      //@@author johndoe
      method 1 ...
      method 2 ...
      //@@author sarahkhoo
      method 3 ...
      //@@author johndoe
      method 4 ...

- If you don't know who wrote the code segment below yours, you may put an empty `//@@author` (i.e. no GitHub username) to indicate the end of the code segment you wrote. The author of code below yours can add the GitHub username to the empty tag later. Here is a sample code with an empty author tag:

      method 0 ...
      //@@author johndoe
      method 1 ...
      method 2 ...
      //@@author
      method 3 ...
      method 4 ...

- The author tag syntax varies based on file type e.g. for java, css, fxml. Use the corresponding comment syntax for non-Java files.

  Here is an example code from an xml/fxml file.

      <!-- @@author sereneWong -->
      <textbox>
        <label>...</label>
        <input>...</input>
      </textbox>
      ...

- Do not put the `//@@author` inside java header comments.

  👎

      /**
       * Returns true if ...
       * @@author johndoe
       */

  👍

      //@@author johndoe
      /**
       * Returns true if ...
       */
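
To make the attribution rule concrete (each tag claims the lines up to the next tag or the end of the file, and an empty tag leaves the following lines unclaimed), here is a minimal sketch of a line counter. It is an illustration only: `AuthorTagScanner` and its method are hypothetical names, the regular expression is an assumption about what a username looks like, and nothing here is claimed to match how the course's analysis tools work internally. The sketch treats any suffix such as `-reused` as part of the label, and it does not enforce the rule about header comments.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical scanner that counts lines claimed by each @@author tag. */
final class AuthorTagScanner {
    // Matches "//@@author name", "<!-- @@author name -->" and the empty "//@@author" form.
    // Assumption: a username starts with a letter or digit and may contain hyphens.
    private static final Pattern TAG =
            Pattern.compile("@@author(?:\\s+([A-Za-z0-9][A-Za-z0-9-]*))?");

    /** Returns the number of non-tag lines attributed to each label, in order of first appearance. */
    static Map<String, Integer> countLinesPerAuthor(List<String> lines) {
        Map<String, Integer> counts = new LinkedHashMap<>();
        String current = null; // null means the following lines are unclaimed
        for (String line : lines) {
            Matcher matcher = TAG.matcher(line);
            if (matcher.find()) {
                current = matcher.group(1); // null when the tag is empty
                continue;                   // the tag line itself is not counted
            }
            if (current != null) {
                counts.merge(current, 1, Integer::sum);
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        List<String> sample = Arrays.asList(
                "//@@author johndoe", "method 1 ...", "method 2 ...",
                "//@@author sarahkhoo", "method 3 ...",
                "//@@author johndoe", "method 4 ...");
        System.out.println(countLinesPerAuthor(sample));
    }
}
```

Running it on the first sample file above attributes three lines to `johndoe` and one to `sarahkhoo`; on the second sample, the lines after the empty tag are left out of every author's count.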

What to and what not to annotate

- Annotate both functional and test code. There is no need to annotate documentation files.

- Annotate only significant size code blocks that can be reviewed on their own e.g., a class, a sequence of methods, a method.
  - Claiming credit for code blocks smaller than a method is discouraged but allowed. If you do, do it sparingly and only claim meaningful blocks of code such as a block of statements, a loop, or an if-else statement.
  - If an enhancement required you to do tiny changes in many places, there is no need to annotate all those tiny changes; you can describe those changes in the Project Portfolio page instead.
  - If a code block was touched by more than one person, either let the person who wrote most of it (e.g. more than 80%) take credit for the entire block, or leave it as 'unclaimed' (i.e., no author tags).
  - Related to the above point, if you claim a code block as your own, more than 80% of the code in that block should have been written by yourself. For example, no more than 20% of it can be code you reused from somewhere.
  - 💡 GitHub has a blame feature and a history feature that can help you determine who wrote a piece of code.

- Do not try to boost the quantity of your contribution using unethical means such as duplicating the same code in multiple places. In particular, do not copy-paste test cases to create redundant tests. Even repetitive code blocks within test methods should be extracted out as utility methods to reduce code duplication. Individual members are responsible for making sure code attributed to them is correct. If you notice a team member claiming credit for code that he/she did not write or using other questionable tactics, you can email us (after the final submission) to let us know.

- If you wrote a significant amount of code that was not used in the final product,
  - Create a folder called `{project root}/unused`
  - Move unused files (or copies of files containing unused code) to that folder
  - Use `//@@author {yourGithubUsername}-unused` to mark unused code in those files (note the suffix `unused`) e.g.

        //@@author johndoe-unused
        method 1 ...
        method 2 ...

    Please put a comment in the code to explain why it was not used.

- If you reused code from elsewhere, mark such code as `//@@author {yourGithubUsername}-reused` (note the suffix `reused`) e.g.

      //@@author johndoe-reused
      method 1 ...
      method 2 ...

- You can use empty `@@author` tags to mark code as not yours when RepoSense attributes the code to you incorrectly.
  - Code generated by the IDE/framework should not be annotated as your own.
  - Code you modified in minor ways e.g. adding a parameter. These should not be claimed as yours but you can mention these additional contributions in the Project Portfolio page if you want to claim credit for them.
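
The tag variants described in this section (plain username, `-reused` suffix, `-unused` suffix, and the empty tag) can be summarized as a small classification. The sketch below is hypothetical: `AuthorTag`, `Kind` and `parse` are names invented for illustration and are not taken from RepoSense or any course tool. It also assumes that no real username ends in `-reused` or `-unused`; such a username would be misclassified.

```java
/** Hypothetical value type that classifies the text following "@@author". */
final class AuthorTag {
    enum Kind { OWN, REUSED, UNUSED, UNCLAIMED }

    final String username; // null for an empty (unclaimed) tag
    final Kind kind;

    private AuthorTag(String username, Kind kind) {
        this.username = username;
        this.kind = kind;
    }

    /** Parses the text that follows "@@author", e.g. "johndoe-reused", "johndoe" or "". */
    static AuthorTag parse(String tagValue) {
        String value = tagValue == null ? "" : tagValue.trim();
        if (value.isEmpty()) {
            return new AuthorTag(null, Kind.UNCLAIMED);
        }
        if (value.endsWith("-reused")) {
            return new AuthorTag(stripSuffix(value, "-reused"), Kind.REUSED);
        }
        if (value.endsWith("-unused")) {
            return new AuthorTag(stripSuffix(value, "-unused"), Kind.UNUSED);
        }
        return new AuthorTag(value, Kind.OWN);
    }

    private static String stripSuffix(String value, String suffix) {
        return value.substring(0, value.length() - suffix.length());
    }

    public static void main(String[] args) {
        AuthorTag tag = parse("johndoe-reused");
        System.out.println(tag.username + " " + tag.kind);
    }
}
```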

At the end of the project each student is required to submit a Project Portfolio Page.

- Objective:
  - For you to use (e.g. in your resume) as a well-documented data point of your SE experience
  - For us to use as a data point to evaluate your
    - contributions to the project
    - documentation skills

- Sections to include:
  - Overview: A short overview of your product to provide some context to the reader.
  - Summary of Contributions:
    - Code contributed: Give a link to your code on Project Code Dashboard, which should be `https://nus-cs2103-ay1819s2.github.io/cs2103-dashboard/#=undefined&search=githbub_username_in_lower_case` (replace `githbub_username_in_lower_case` with your actual username in lower case e.g., `johndoe`). This link is also available in the Project List Page (linked to the icon under your photo).
    - Features implemented: A summary of the features you implemented. If you implemented multiple features, you are recommended to indicate which one is the biggest feature.
    - Other contributions:
      - Contributions to project management e.g., setting up project tools, managing releases, managing issue tracker etc.
      - Evidence of helping others e.g. responses you posted in our forum, bugs you reported in other teams' products
      - Evidence of technical leadership e.g. sharing useful information in the forum
  - Contributions to the User Guide: Reproduce the parts in the User Guide that you wrote. This can include features you implemented as well as features you propose to implement.

    The purpose of allowing you to include proposed features is to provide you more flexibility to show your documentation skills, e.g. you can bring in a proposed feature just to give you an opportunity to use a UML diagram type not used by the actual features.
  - Contributions to the Developer Guide: Reproduce the parts in the Developer Guide that you wrote. Ensure there is enough content to evaluate your technical documentation skills and UML modelling skills. You can include descriptions of your design/implementations, possible alternatives, pros and cons of alternatives, etc.
  - If you plan to use the PPP in your resume, you can also include your SE work outside of the module (will not be graded).

- Format:
  - File name: `docs/team/githbub_username_in_lower_case.adoc` e.g., `docs/team/johndoe.adoc`
  - Follow the example in the AddressBook-Level4
  - 💡 You can use Asciidoc's `include` feature to include sections from the developer guide or the user guide in your PPP. Follow the example in the sample.
  - It is assumed that all contents in the PPP were written primarily by you. If any section is written by someone else, e.g. someone else described the feature in the User Guide but you implemented the feature, clearly state that the section was written by someone else (e.g. `Start of Extract [from: User Guide] written by Jane Doe`). Reason: Your writing skills will be evaluated based on the PPP.

- Page limit: