Relevant: [

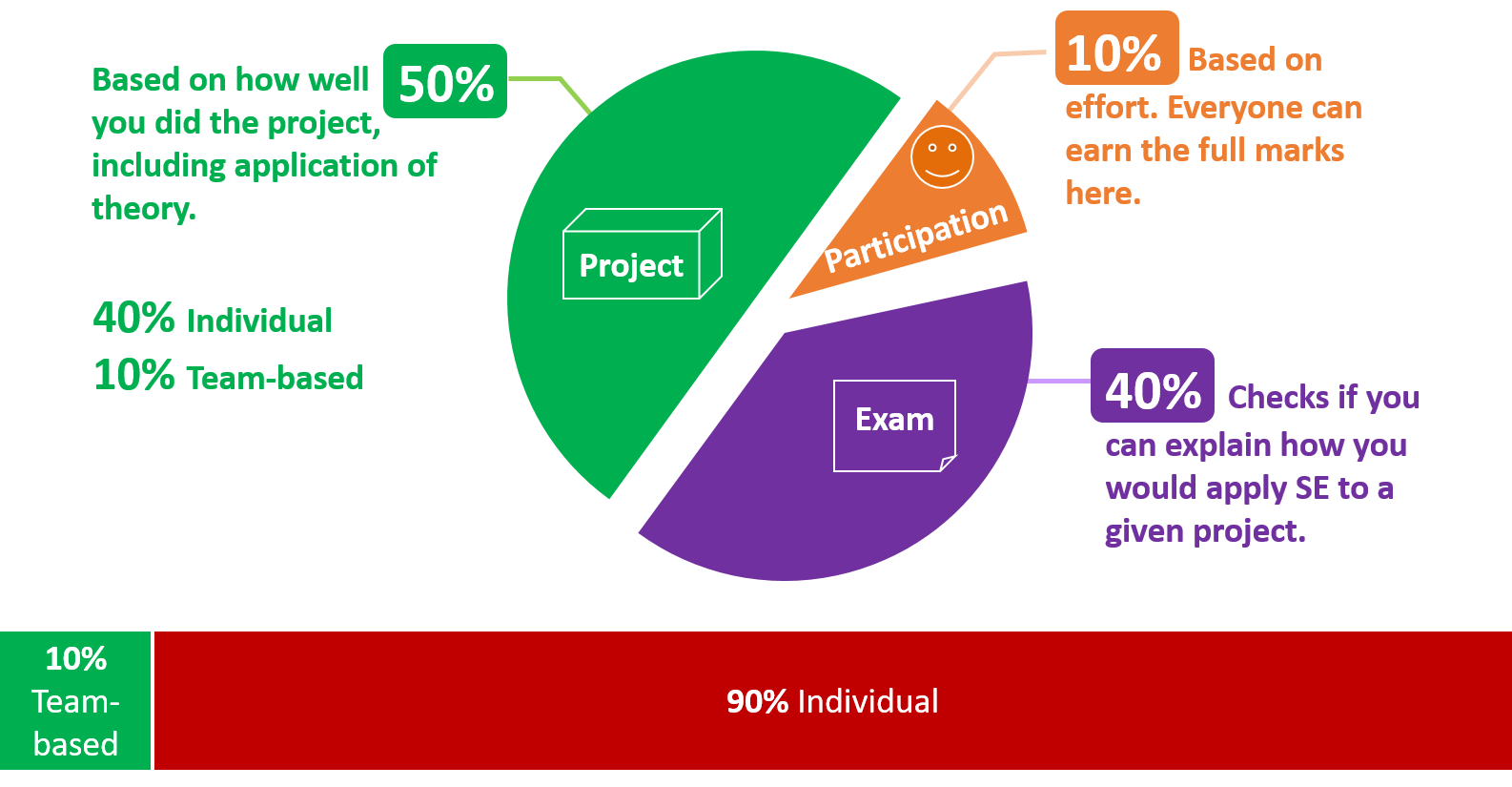

To receive full 10 marks allocated for participation, earn at least 15 participation points.

There are 30+ available points to choose from:

-

Good peer ratings - Criteria for professional conduct (1 point for each criterion, max 7)

- Competency criteria (2 points for each, max 6)

Only those who submit peer evaluations can earn participation points from peer evaluations they receive.

- In-lecture quizzes (1 each, max 10 points)

- Module admin tasks done on time and as instructed

- Peer evaluations (1 points each, max 3)

- Pre-module survey (1 points)

- Enhanced AB1-AB3 (2 points each, max 6 points)

Relevant: [

Peer evaluation criteria: professional conduct

- Professional Communication :

- Communicates sufficiently and professionally. e.g. Does not use offensive language or excessive slang in project communications.

- Responds to communication from team members in a timely manner (e.g. within 24 hours).

- Punctuality: Does not cause others to waste time or slow down project progress by frequent tardiness.

- Dependability: Promises what can be done, and delivers what was promised.

- Effort: Puts in sufficient effort to, and tries their best to keep up with the module/project pace. Seeks help from others when necessary.

- Quality: Does not deliver work products that seem to be below the student's competence level i.e. tries their best to make the work product as high quality as possible within her competency level.

- Meticulousness:

- Rarely overlooks submission requirements.

- Rarely misses compulsory module activities such as pre-module survey.

- Teamwork: How willing are you to act as part of a team, contribute to team-level tasks, adhere to team decisions, etc.

Peer evaluation criteria: competency

- Technical Competency: Able to gain competency in all the required tools and techniques.

- Mentoring skills: Helps others when possible. Able to mentor others well.

- Communication skills: Able to communicate (written and spoken) well. Takes initiative in discussions.

Relevant: [

There is no midterm.

The final exam has two parts:

- Part 1: MCQ questions (1 hour, 20 marks)

- Part 2: Essay questions (1 hour, 20 marks)

Both papers will be given to you at the start but you need to answer Part 1 first (i.e. MCQ paper). It will be collected 1 hour after the exam start time (even if arrived late for the exam). You are free to start part 2 early if you finish Part 1 early.

Final Exam: Part 1 (MCQ)

Each MCQ question gives you a statement to evaluate.

An example statement

Testing is a Q&A activity

Unless stated otherwise, the meaning of answer options are

A: Agree. If the question has multiple statements, agree with all of them.

B: Disagree. If the question has multiple statements, disagree with at least one of them

C, D, E: Not used

Number of questions: 100

Note that you have slightly more than ½ minute for each question, which means you need to go through the questions fairly quickly.

Given the fast pace required by the paper, to be fair to all students, you will not be allowed to clarify doubts about questions (in Part 1) by talking to invigilators.

- If a question is not clear, you can circle the question number in the question paper and write your doubt in the question paper, near that question.

- If your doubt is justified (e.g. there is a typo in the question) or if many students found the question to be unclear, the examiner may decide to omit that question from grading.

Questions in Part 1 are confidential. You are not allowed to reveal Part 1 content to anyone after the exam. All pages of the assessment paper are to be returned at the end of the exam.

You will be given OCR forms (i.e., bubble sheets) to indicate your answers for Part 1. As each OCR form can accommodate only 50 answers, you will be given 2 OCR forms. Indicate your student number in both OCR forms.

To save space, we use the following notation in MCQ question. [x | y | z] means ‘x and z, but not y’

SE is [boring | useful | fun] means SE is not boring AND SE is useful AND SE is fun.

Consider the following statement:

- IDEs can help with [writing | debugging | testing] code.

The correct response for it is Disagree because IDEs can help with all three of the given options, not just writing and testing.

Some questions will use underlines or highlighting to draw your attention to a specific part of the question. That is because those parts are highly relevant to the answer and we don’t want you to miss the relevance of that part.

Consider the statement below:

Technique ABC can be used to generate more test cases.

The word can is underlined because the decision you need to make is whether the ABC can or cannot be used to generate more test cases; the decision is not whether ABC can be used to generate more or better test cases.

Markers such as the one given below appears at left margin of the paper to indicate where the question corresponds to a new column in the OCR form. E.g. questions 11, 21, 31, etc. (a column has 10 questions). Such markers can help you to detect if you missed a question in the previous 10 questions. You can safely ignore those markers if you are not interested in making use of that additional hint.

Some questions have tags e.g., the question below has a tag JAVA. These tags provide additional context about the question. In the example below, the tag indicates that the code given in the question is Java code.

The exam paper is open-book: you may bring any printed or written materials to the exam in hard copy format. However, given the fast pace required by Part 1, you will not have time left to refer notes during that part of the exam.

💡 Mark the OCR form as you go, rather than planning to transfer your answers to the OCR form near the end. Reason: Given there are 100 questions, it will be hard to estimate how much time you need to mass-transfer all answers to OCR forms.

💡 Write the answer in the exam paper as well when marking it in the OCR form. Reason: It will reduce the chance of missing a question. Furthermore, in case you missed a question, it will help you correct the OCR form quickly.

💡 We have tried to avoid deliberately misleading/tricky questions. If a question seems to take a very long time to figure out, you are probably over-thinking it.

You will be given a practice exam paper to familiarize yourself with this slightly unusual exam format.

Final Exam: Part 2 (Essay)

Unlike in part 1, you can ask invigilators for clarifications if you found a question to be unclear in part 2.

Yes, you may use pencils when answering part 2.

Relevant: [

Note that project grading is not competitive (not bell curved). CS2103T projects will be assessed separately from CS2103 projects. This is to account for the perceived difference in workload. Given below is the marking scheme.

Total: 50 marks ( 38 individual marks + 12 team marks)

See the sections below for details of how we assess each aspect.

1. Project Assessment: Product Design [ 5 marks]

Evaluates: how well your features fit together to form a cohesive product (not how many features or how big the features are) and how well does it match the target user

Evaluated by:

- tutors (based on product demo and user guide)

- peers from other teams (based on peer testing and user guide)

For reference, here are some grading instructions that will be given to peers/tutors grading this aspect:

Evaluate the product design based on how the product V2.0 (not V1.4) is described in the User Guide.

unable to judge: You are unable to judge this aspect for some reason e.g., UG is not available or does not have enough information.Target user:

target user specified and appropriate: The target user is clearly specified, prefers typing over other modes of input, and not too general (should be narrowed to a specific user group with certain characteristics).value specified and matching: The value offered by the product is clearly specified and matches the target user.Value to the target user:

value: low: The value to target user is low. App is not worth using.value: medium: Some small group of target users might find the app worth using.value: high: Most of the target users are likely to find the app worth using.Feature-fit:

feature-fit: low: Features don't seem to fit together.feature-fit: medium: Some features fit together but some don't.feature-fit: high: All features fit together.

polished: The product looks well-designed.

2. Project Assessment: Implementation [ 13 marks]

2A. Code quality

Evaluates: the quality of the code you have written yourself

Based on: the parts of the code you claim as written by you

Evaluation method:: manual inspection by tutors + automated-analysis by a script

For reference, here are some factors considered when grading this aspect:

At least some evidence of these (see here for more info)

- logging

- exceptions

- assertions

- defensive coding

No coding standard violations e.g. all boolean variables/methods sounds like booleans. Checkstyle can prevent only some coding standard violations; others need to be checked manually.

SLAP is applied at a reasonable level. Long methods or deeply-nested code are symptoms of low-SLAP.

No noticeable code duplications i.e. if there multiple blocks of code that vary only in minor ways, try to extract out similarities into one place, especially in test code.

Evidence of applying

code quality guidelines covered in the module .

Code Quality

Can explain the importance of code quality

Always code as if the person who ends up maintaining your code will be a violent psychopath who knows where you live. -- Martin Golding

Can explain the importance of readability

Programs should be written and polished until they acquire publication quality. --Niklaus Wirth

Among various dimensions of code quality, such as run-time efficiency, security, and robustness, one of the most important is understandability. This is because in any non-trivial software project, code needs to be read, understood, and modified by other developers later on. Even if we do not intend to pass the code to someone else, code quality is still important because we all become 'strangers' to our own code someday.

The two code samples given below achieve the same functionality, but one is easier to read.

|

Bad |

|

Good |

|

Bad |

|

Good |

Can improve code quality using technique: avoid long methods

Be wary when a method is longer than the computer screen, and take corrective action when it goes beyond 30 LOC (lines of code). The bigger the haystack, the harder it is to find a needle.

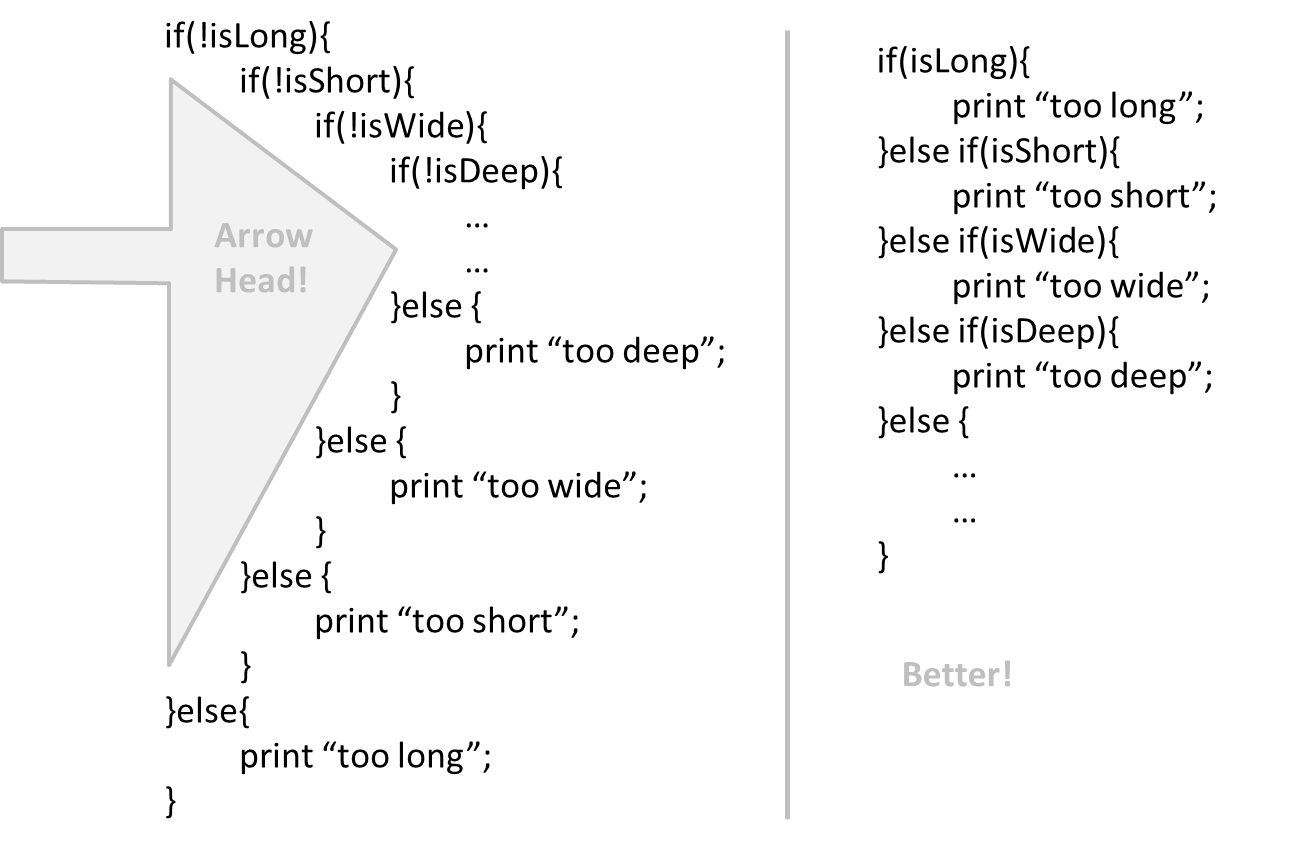

Can improve code quality using technique: avoid deep nesting

If you need more than 3 levels of indentation, you're screwed anyway, and should fix your program. --Linux 1.3.53 CodingStyle

In particular, avoid arrowhead style code.

Example:

Can improve code quality using technique: avoid complicated expressions

Avoid complicated expressions, especially those having many negations and nested parentheses. If you must evaluate complicated expressions, have it done in steps (i.e. calculate some intermediate values first and use them to calculate the final value).

Example:

Bad

return ((length < MAX_LENGTH) || (previousSize != length)) && (typeCode == URGENT);

Good

boolean isWithinSizeLimit = length < MAX_LENGTH;

boolean isSameSize = previousSize != length;

boolean isValidCode = isWithinSizeLimit || isSameSize;

boolean isUrgent = typeCode == URGENT;

return isValidCode && isUrgent;

Example:

Bad

return ((length < MAX_LENGTH) or (previous_size != length)) and (type_code == URGENT)

Good

is_within_size_limit = length < MAX_LENGTH

is_same_size = previous_size != length

is_valid_code = is_within_size_limit or is_same_size

is_urgent = type_code == URGENT

return is_valid_code and is_urgent

The competent programmer is fully aware of the strictly limited size of his own skull; therefore he approaches the programming task in full humility, and among other things he avoids clever tricks like the plague. -- Edsger Dijkstra

Can improve code quality using technique: avoid magic numbers

When the code has a number that does not explain the meaning of the number, we call that a magic number (as in “the number appears as if by magic”). Using a

Example:

|

Bad |

|

Good |

Note: Python does not have a way to make a variable a constant. However, you can use a normal variable with an ALL_CAPS name to simulate a constant.

|

Bad |

|

Good |

Similarly, we can have ‘magic’ values of other data types.

Bad

"Error 1432" // A magic string!

Can improve code quality using technique: make the code obvious

Make the code as explicit as possible, even if the language syntax allows them to be implicit. Here are some examples:

- [

Java] Use explicit type conversion instead of implicit type conversion. - [

Java,Python] Use parentheses/braces to show grouping even when they can be skipped. - [

Java,Python] Useenumerations when a certain variable can take only a small number of finite values. For example, instead of declaring the variable 'state' as an integer and using values 0,1,2 to denote the states 'starting', 'enabled', and 'disabled' respectively, declare 'state' as typeSystemStateand define an enumerationSystemStatethat has values'STARTING','ENABLED', and'DISABLED'.

Can improve code quality using technique: structure code logically

Lay out the code so that it adheres to the logical structure. The code should read like a story. Just like we use section breaks, chapters and paragraphs to organize a story, use classes, methods, indentation and line spacing in your code to group related segments of the code. For example, you can use blank lines to group related statements together. Sometimes, the correctness of your code does not depend on the order in which you perform certain intermediary steps. Nevertheless, this order may affect the clarity of the story you are trying to tell. Choose the order that makes the story most readable.

Can improve code quality using technique: do not 'trip up' reader

Avoid things that would make the reader go ‘huh?’, such as,

- unused parameters in the method signature

- similar things look different

- different things that look similar

- multiple statements in the same line

- data flow anomalies such as, pre-assigning values to variables and modifying it without any use of the pre-assigned value

Can improve code quality using technique: practice kissing

As the old adage goes, "keep it simple, stupid” (KISS). Do not try to write ‘clever’ code. For example, do not dismiss the brute-force yet simple solution in favor of a complicated one because of some ‘supposed benefits’ such as 'better reusability' unless you have a strong justification.

Debugging is twice as hard as writing the code in the first place. Therefore, if you write the code as cleverly as possible, you are, by definition, not smart enough to debug it. --Brian W. Kernighan

Programs must be written for people to read, and only incidentally for machines to execute. --Abelson and Sussman

Can improve code quality using technique: avoid premature optimizations

Optimizing code prematurely has several drawbacks:

- We may not know which parts are the real performance bottlenecks. This is especially the case when the code undergoes transformations (e.g. compiling, minifying, transpiling, etc.) before it becomes an executable. Ideally, you should use a profiler tool to identify the actual bottlenecks of the code first, and optimize only those parts.

- Optimizing can complicate the code, affecting correctness and understandability

- Hand-optimized code can be harder for the compiler to optimize (the simpler the code, the easier for the compiler to optimize it). In many cases a compiler can do a better job of optimizing the runtime code if you don't get in the way by trying to hand-optimize the source code.

A popular saying in the industry is make it work, make it right, make it fast which means in most cases getting the code to perform correctly should take priority over optimizing it. If the code doesn't work correctly, it has no value on matter how fast/efficient it it.

Premature optimization is the root of all evil in programming. --Donald Knuth

Note that there are cases where optimizing takes priority over other things e.g. when writing code for resource-constrained environments. This guideline simply a caution that you should optimize only when it is really needed.

Can improve code quality using technique: SLAP hard

Avoid varying the level of

Example:

Bad

readData();

salary = basic*rise+1000;

tax = (taxable?salary*0.07:0);

displayResult();

Good

readData();

processData();

displayResult();

Design → Design Fundamentals → Abstraction →

Abstraction is a technique for dealing with complexity. It works by establishing a level of complexity we are interested in, and suppressing the more complex details below that level.

The guiding principle of abstraction is that only details that are relevant to the current perspective or the task at hand needs to be considered. As most programs are written to solve complex problems involving large amounts of intricate details, it is impossible to deal with all these details at the same time. That is where abstraction can help.

Ignoring lower level data items and thinking in terms of bigger entities is called data abstraction.

Within a certain software component, we might deal with a user data type, while ignoring the details contained in the user data item such as name, and date of birth. These details have been ‘abstracted away’ as they do not affect the task of that software component.

Control abstraction abstracts away details of the actual control flow to focus on tasks at a simplified level.

print(“Hello”) is an abstraction of the actual output mechanism within the computer.

Abstraction can be applied repeatedly to obtain progressively higher levels of abstractions.

An example of different levels of data abstraction: a File is a data item that is at a higher level than an array and an array is at a higher level than a bit.

An example of different levels of control abstraction: execute(Game) is at a higher level than print(Char) which is at a higher than an Assembly language instruction MOV.

Abstraction is a general concept that is not limited to just data or control abstractions.

Some more general examples of abstraction:

- An OOP class is an abstraction over related data and behaviors.

- An architecture is a higher-level abstraction of the design of a software.

- Models (e.g., UML models) are abstractions of some aspect of reality.

Can improve code quality using technique: make the happy path prominent

The happy path (i.e. the execution path taken when everything goes well) should be clear and prominent in your code. Restructure the code to make the happy path unindented as much as possible. It is the ‘unusual’ cases that should be indented. Someone reading the code should not get distracted by alternative paths taken when error conditions happen. One technique that could help in this regard is the use of guard clauses.

Example:

Bad

if (!isUnusualCase) { //detecting an unusual condition

if (!isErrorCase) {

start(); //main path

process();

cleanup();

exit();

} else {

handleError();

}

} else {

handleUnusualCase(); //handling that unusual condition

}

In the code above,

- Unusual condition detection is separated from their handling.

- Main path is nested deeply.

Good

if (isUnusualCase) { //Guard Clause

handleUnusualCase();

return;

}

if (isErrorCase) { //Guard Clause

handleError();

return;

}

start();

process();

cleanup();

exit();

In contrast, the above code

- deals with unusual conditions as soon as they are detected so that the reader doesn't have to remember them for long.

- keeps the main path un-indented.

Can explain the need for following a standard

One essential way to improve code quality is to follow a consistent style. That is why software engineers follow a strict coding standard (aka style guide).

The aim of a coding standard is to make the entire code base look like it was written by one person. A coding standard is usually specific to a programming language and specifies guidelines such as the location of opening and closing braces, indentation styles and naming styles (e.g. whether to use Hungarian style, Pascal casing, Camel casing, etc.). It is important that the whole team/company use the same coding standard and that standard is not generally inconsistent with typical industry practices. If a company's coding standards is very different from what is used typically in the industry, new recruits will take longer to get used to the company's coding style.

💡 IDEs can help to enforce some parts of a coding standard e.g. indentation rules.

What is the recommended approach regarding coding standards?

c

What is the aim of using a coding standard? How does it help?

Can follow simple mechanical style rules

Learn basic guidelines of the Java coding standard (by OSS-Generic)

Sample coding standard: PEP 8 Python Style Guide -- by Python.org

Consider the code given below:

import java.util.*;

public class Task {

public static final String descriptionPrefix = "description: ";

private String description;

private boolean important;

List<String> pastDescription = new ArrayList<>(); // a list of past descriptions

public Task(String d) {

this.description = d;

if (!d.isEmpty())

this.important = true;

}

public String getAsXML() { return "<task>"+description+"</task>"; }

/**

* Print the description as a string.

*/

public void printingDescription(){ System.out.println(this); }

@Override

public String toString() { return descriptionPrefix + description; }

}

In what ways the code violate the basic guidelines (i.e., those marked with one ⭐️) of the OSS-Generic Java Coding Standard given here?

Here are three:

descriptionPrefixis a constant and should be namedDESCRIPTION_PREFIX- method name

printingDescription()should be named asprintDescription() - boolean variable

importantshould be named to sound boolean e.g.,isImportant

There are many more.

Can follow intermediate style rules

Go through the provided Java coding standard and learn the intermediate style rules.

According to the given Java coding standard, which one of these is not a good name?

b

Explanation: checkWeight is an action. Naming variables as actions makes the code harder to follow. isWeightValid may be a better name.

Repeat the exercise in the panel below but also find violations of intermediate level guidelines.

Consider the code given below:

import java.util.*;

public class Task {

public static final String descriptionPrefix = "description: ";

private String description;

private boolean important;

List<String> pastDescription = new ArrayList<>(); // a list of past descriptions

public Task(String d) {

this.description = d;

if (!d.isEmpty())

this.important = true;

}

public String getAsXML() { return "<task>"+description+"</task>"; }

/**

* Print the description as a string.

*/

public void printingDescription(){ System.out.println(this); }

@Override

public String toString() { return descriptionPrefix + description; }

}

In what ways the code violate the basic guidelines (i.e., those marked with one ⭐️) of the OSS-Generic Java Coding Standard given here?

Here are three:

descriptionPrefixis a constant and should be namedDESCRIPTION_PREFIX- method name

printingDescription()should be named asprintDescription() - boolean variable

importantshould be named to sound boolean e.g.,isImportant

There are many more.

Here's one you are more likely to miss:

* Print the description as a string.→* Prints the description as a string.

There are more.

Can explain the need for good names in code

Proper naming improves the readability. It also reduces bugs caused by ambiguities regarding the intent of a variable or a method.

There are only two hard things in Computer Science: cache invalidation and naming things. -- Phil Karlton

Can improve code quality using technique: use nouns for things and verbs for actions

Every system is built from a domain-specific language designed by the programmers to describe that system. Functions are the verbs of that language, and classes are the nouns. ― Robert C. Martin, Clean Code: A Handbook of Agile Software Craftsmanship

Use nouns for classes/variables and verbs for methods/functions.

Examples:

| Name for a | Bad | Good |

|---|---|---|

| Class | CheckLimit |

LimitChecker |

| method | result() |

calculate() |

Distinguish clearly between single-valued and multivalued variables.

Examples:

Good

Person student;

ArrayList<Person> students;

Good

student = Person('Jim')

students = [Person('Jim'), Person('Alice')]

Can improve code quality using technique: use standard words

Use correct spelling in names. Avoid 'texting-style' spelling. Avoid foreign language words, slang, and names that are only meaningful within specific contexts/times e.g. terms from private jokes, a TV show currently popular in your country

Can improve code quality using technique: use name to explain

A name is not just for differentiation; it should explain the named entity to the reader accurately and at a sufficient level of detail.

Examples:

| Bad | Good |

|---|---|

processInput() (what 'process'?) |

removeWhiteSpaceFromInput() |

flag |

isValidInput |

temp |

If the name has multiple words, they should be in a sensible order.

Examples:

| Bad | Good |

|---|---|

bySizeOrder() |

orderBySize() |

Imagine going to the doctor's and saying "My eye1 is swollen"! Don’t use numbers or case to distinguish names.

Examples:

| Bad | Bad | Good |

|---|---|---|

value1, value2 |

value, Value |

originalValue, finalValue |

Can improve code quality using technique: not too long, not too short

While it is preferable not to have lengthy names, names that are 'too short' are even worse. If you must abbreviate or use acronyms, do it consistently. Explain their full meaning at an obvious location.

Can improve code quality using technique: avoid misleading names

Related things should be named similarly, while unrelated things should NOT.

Example: Consider these variables

colorBlack: hex value for color blackcolorWhite: hex value for color whitecolorBlue: number of times blue is usedhexForRed: : hex value for color red

This is misleading because colorBlue is named similar to colorWhite and colorBlack but has a different purpose while hexForRed is named differently but has very similar purpose to the first two variables. The following is better:

hexForBlackhexForWhitehexForRedblueColorCount

Avoid misleading or ambiguous names (e.g. those with multiple meanings), similar sounding names, hard-to-pronounce ones (e.g. avoid ambiguities like "is that a lowercase L, capital I or number 1?", or "is that number 0 or letter O?"), almost similar names.

Examples:

| Bad | Good | Reason |

|---|---|---|

phase0 |

phaseZero |

Is that zero or letter O? |

rwrLgtDirn |

rowerLegitDirection |

Hard to pronounce |

right left wrong |

rightDirection leftDirection wrongResponse |

right is for 'correct' or 'opposite of 'left'? |

redBooks readBooks |

redColorBooks booksRead |

red and read (past tense) sounds the same |

FiletMignon |

egg |

If the requirement is just a name of a food, egg is a much easier to type/say choice than FiletMignon |

Can explain the need for avoiding error-prone shortcuts

It is safer to use language constructs in the way they are meant to be used, even if the language allows shortcuts. Some such coding practices are common sources of bugs. Know them and avoid them.

Can improve code quality using technique: use the default branch

Always include a default branch in case statements.

Furthermore, use it for the intended default action and not just to execute the last option. If there is no default action, you can use the 'default' branch to detect errors (i.e. if execution reached the default branch, throw an exception). This also applies to the final else of an if-else construct. That is, the final else should mean 'everything else', and not the final option. Do not use else when an if condition can be explicitly specified, unless there is absolutely no other possibility.

Bad

if (red) print "red";

else print "blue";

Good

if (red) print "red";

else if (blue) print "blue";

else error("incorrect input");

Can improve code quality using technique: don't recycle variables or parameters

- Use one variable for one purpose. Do not reuse a variable for a different purpose other than its intended one, just because the data type is the same.

- Do not reuse formal parameters as local variables inside the method.

Bad

double computeRectangleArea(double length, double width) {

length = length * width;

return length;

}

Good

double computeRectangleArea(double length, double width) {

double area;

area = length * width;

return area;

}

Can improve code quality using technique: avoid empty catch blocks

Never write an empty catch statement. At least give a comment to explain why the catch block is left empty.

Can improve code quality using technique: delete dead code

We all feel reluctant to delete code we have painstakingly written, even if we have no use for that code any more ("I spent a lot of time writing that code; what if we need it again?"). Consider all code as baggage you have to carry; get rid of unused code the moment it becomes redundant. If you need that code again, simply recover it from the revision control tool you are using. Deleting code you wrote previously is a sign that you are improving.

Can improve code quality using technique: minimise scope of variables

Minimize global variables. Global variables may be the most convenient way to pass information around, but they do create implicit links between code segments that use the global variable. Avoid them as much as possible.

Define variables in the least possible scope. For example, if the variable is used only within the if block of the conditional statement, it should be declared inside that if block.

The most powerful technique for minimizing the scope of a local variable is to declare it where it is first used. -- Effective Java, by Joshua Bloch

Resources:

Can improve code quality using technique: minimise code duplication

Code duplication, especially when you copy-paste-modify code, often indicates a poor quality implementation. While it may not be possible to have zero duplication, always think twice before duplicating code; most often there is a better alternative.

This guideline is closely related to the

Supplmentary → Principles →

DRY (Don't Repeat Yourself) Principle: Every piece of knowledge must have a single, unambiguous, authoritative representation within a system The Pragmatic Programmer, by Andy Hunt and Dave Thomas

This principle guards against duplication of information.

The functionality implemented twice is a violation of the DRY principle even if the two implementations are different.

The value a system-wide timeout being defined in multiple places is a violation of DRY.

Can explain the need for commenting minimally but sufficiently

Good code is its own best documentation. As you’re about to add a comment, ask yourself, ‘How can I improve the code so that this comment isn’t needed?’ Improve the code and then document it to make it even clearer. --Steve McConnell, Author of Clean Code

Some think commenting heavily increases the 'code quality'. This is not so. Avoid writing comments to explain bad code. Improve the code to make it self-explanatory.

Can improve code quality using technique: do not repeat the obvious

If the code is self-explanatory, refrain from repeating the description in a comment just for the sake of 'good documentation'.

Bad

// increment x

x++;

//trim the input

trimInput();

Can improve code quality using technique: write to the reader

Do not write comments as if they are private notes to self. Instead, write them well enough to be understood by another programmer. One type of comments that is almost always useful is the header comment that you write for a class or an operation to explain its purpose.

Examples:

Bad Reason: this comment will only make sense to the person who wrote it

// a quick trim function used to fix bug I detected overnight

void trimInput(){

....

}

Good

/** Trims the input of leading and trailing spaces */

void trimInput(){

....

}

Bad Reason: this comment will only make sense to the person who wrote it

# a quick trim function used to fix bug I detected overnight

def trim_input():

...

Good

def trim_input():

"""Trim the input of leading and trailing spaces"""

...

Can improve code quality using technique: explain what and why, not how

Comments should explain what and why aspect of the code, rather than the how aspect.

What : The specification of what the code supposed to do. The reader can compare such comments to the implementation to verify if the implementation is correct

Example: This method is possibly buggy because the implementation does not seem to match the comment. In this case the comment could help the reader to detect the bug.

/** Removes all spaces from the {@code input} */

void compact(String input){

input.trim();

}

Why : The rationale for the current implementation.

Example: Without this comment, the reader will not know the reason for calling this method.

// Remove spaces to comply with IE23.5 formatting rules

compact(input);

How : The explanation for how the code works. This should already be apparent from the code, if the code is self-explanatory. Adding comments to explain the same thing is redundant.

Example:

Bad Reason: Comment explains how the code works.

// return true if both left end and right end are correct or the size has not incremented

return (left && right) || (input.size() == size);

Good Reason: Code refactored to be self-explanatory. Comment no longer needed.

boolean isSameSize = (input.size() == size) ;

return (isLeftEndCorrect && isRightEndCorrect) || isSameSize;

2B. Feature Quality

Evaluates: how deep is your biggest feature, how complete is the feature, how well it solves the target user needs, etc.

Evaluation method:

- by peers from own team and other teams (based on peer testing session, PPP, RepoSense data) -- more weight for team members from own team than those from other teams

- by tutors (based on demo,

PPP , RepoSense data)

For reference, here are some grading instructions that will be given to peers/tutors grading this aspect:

Evaluate the biggest feature done by the student for difficulty, completeness, and testability. Note: examples given below assume that AB4 did not have the commands

edit,undo, andredo.

- Difficulty

Feature/ difficulty: unable to judge: You are unable to judge this aspect for some reason.Feature/ difficulty: low: e.g. make the existing find command case insensitive.Feature/ difficulty: medium: e.g. an edit command that requires the user to type all fields, even the ones that are not being edited.Feature/ difficulty: high: e.g., undo/redo command- Completeness

Feature/ completeness: unable to judge: You are unable to judge this aspect for some reason.Feature/ completeness: low: A partial implementation of the feature. Barely useful.Feature/ completeness: medium: The feature has enough functionality to be useful for some of the users.Feature/ completeness: high: The feature has all functionality to be useful to almost all users.- Other

Feature/ not hard to test: The feature was not too hard to test manually.Feature/ polished: The feature looks polished (as if done by a professional programmer).Evaluate the amount of work, on a scale of 0 to 30.

- Consider this PR (

historycommand) as 5 units of effort which means this PR (undo/redocommand) is about 15 points of effort. Given that 30 points matches an effort twice as that needed for theundo/redofeature (which was given as an example of anAgrade project), we expect most students to be have efforts lower than 20.- Count all implementation/testing/documentation work as mentioned in that person's PPP. Also look at the actual code written by the person.

- Do not give a high value just to be nice. You will be asked to provide a brief justification for your effort estimates.

3. Project Assessment: QA [ 2 + 10 = 12 marks]

3A. System/Acceptance Testing:

Evaluates: How well you can system-test/acceptance-test a product

Based on:

- bugs you found in the Practical Exam

- our own manual testing (when necessary)

Evaluated by: teaching team (in the capacity of moderators e.g., to verify if it is reasonable for you to reject a bug reported by others)

3B. Developer Testing:

Evaluates: How well you tested your own feature

Based on:

- bugs in your work found by others during the PE

- your test code (note

our expectations for automated testing )

Evaluated by: tutors (in the capacity of moderators e.g., to verify if it is reasonable for the team to downgrade the severity of a bug you reported)

- There is no requirement for a minimum coverage level. Note that in a production environment you are often required to have at least 90% of the code covered by tests. In this project, it can be less. The less coverage you have, the higher the risk of regression bugs, which will cost marks if not fixed before the final submission.

- You must write some tests so that we can evaluate your ability to write tests.

- How much of each type of testing should you do? We expect you to decide. You learned different types of testing and what they try to achieve. Based on that, you should decide how much of each type is required. Similarly, you can decide to what extent you want to automate tests, depending on the benefits and the effort required.

Notes on how marks are calculated based on bugs

- Bugs rejected by the dev team, if the rejection is approved by the teaching team, will not be affect marks of the tester or the developer.

- The penalty/credit for a bug varies based on,

- The severity of the bug:

severity.High>severity.Medium>severity.Low - The type of the bug:

type.FunctionalityBug>type.DocumentationBug>type.FeatureFlaw>type.DocTypo

- The severity of the bug:

- The penalty for a bug is divided equally among assignees.

- The developers are not penalized for the duplicate bug reports they received but the testers earn credit for the duplicate bug reports they submitted as long as the duplicates are not submitted by the same tester.

-

Obvious bugs earn slightly less credit for the tester and slightly more penalty for the developer. - If the team you tested has a low bug count i.e., total bugs found by all testers is low, we will fall back on other means (e.g., performance in mock PE) to calculate your marks for system/acceptance testing.

- Your marks for developer testing depends on the bug density rather than total bug count. Here's an example:

nbugs found in your feature; it is a difficult feature consisting of lot of code → 4/5 marksnbugs found in your feature; it is a small feature with a small amount of code → 1/5 marks

- You don't need to find all bugs in the product to get full marks. For example, finding half of the bugs of that product or 4 bugs, whichever the lower, could earn you full marks.

- Excessive incorrect downgrading/rejecting/

de-duplicating , if deemed an unethical attempt to game the system, may be penalized.

4. Project Assessment: Documentation [ 10 marks]

Evaluates: the quality of the sections you wrote for the user guide and the developer guide

Based on: the relevant sections of your PPP.

Feedback: Submit your UG in v1.1 amd your DG in v 1.3 to allow the tutors to provide feedback on these documents. This will give you a chance to improve for your final submission.

Evaluated by: tutors, peers from own team and other teams

For reference, here are some grading instructions that will be given to peers/tutors grading this aspect:

Evaluate the quality of user documentation based on the parts of the user guide written by the person, as reproduced in the project portfolio. Evaluate from an end-user perspective.

UG/ unable to judge: Less than 1 page worth of UG content written by the student or cannot find PPPUG/ good use of visuals: Uses visuals e.g., screenshots.UG/ good use of examples: Uses examples e.g., sample inputs/outputs.UG/ just enough information: Not too much information. All important information is given.UG/ easy to understand: The information is easy to understand for the target audience.UG/ polished: The document looks neat, well-formatted, and professional.Evaluate the quality of developer documentation based on the developer docs cited/reproduced in the respective project portfolio page. Evaluate from the perspective of a new developer trying to understand how the features are implemented.

DG/ unable to judge: Less than 0.5 pages worth of content OR other problems in the document e.g. looks like included wrong content.DG/ too little: 0.5 - 1 page of documentation- Diagrams:

DG/ types of UML diagrams: 1: Only one type of diagram used (types: Class Diagrams, Object Diagrams, Sequence Diagrams, Activity Diagrams, Use Case Diagrams)DG/ types of UML diagrams: 2: Two types of diagrams usedDG/ types of UML diagrams: 3+: Three or more types of diagrams usedDG/ UML diagrams suitable: The diagrams used for the right purposeDG/ UML notation correct: No more than one minor error in the UML notationDG/ diagrams not repetitive: No evidence of repeating the same diagram with minor differencesDG/ diagrams not too complicated: Diagrams don't cram too much information into themDG/ diagrams integrates with text: Diagrams are well integrated into the textual explanationsDG/ easy to understand: The document is easy to understand/followDG/ just enough information: Not too much information. All important information is given.DG/ polished: The document looks neat, well-formatted, and professional.

5. Project Assessment: Project Management [ 5 + 5 = 10 marks]

5A. Process:

Evaluates: How well you did in project management related aspects of the project, as an individual and as a team

Based on: tutor/bot observations of project milestones and GitHub data

Milestones need to be reached the midnight before of the tutorial for it to be counted as achieved. To get a good grade for this aspect, achieve at least 60% of the recommended milestone progress.

Other criteria:

- Good use of GitHub milestones

- Good use of GitHub release mechanism

- Good version control, based on the repo

- Reasonable attempt to use the forking workflow

- Good task definition, assignment and tracking, based on the issue tracker

- Good use of buffers (opposite: everything at the last minute)

- Project done iteratively and incrementally (opposite: doing most of the work in one big burst)

5B. Team-tasks:

Evaluates: How much you contributed to team-tasks

Based on: peer evaluations, tutor observations

To earn full marks, you should have done a fair share of the team tasks. You can earn bonus marks by doing more than your fair share.

Relevant: [

Here is a non-exhaustive list of team-tasks:

- Necessary general code enhancements e.g.,

- Work related to renaming the product

- Work related to changing the product icon

- Morphing the product into a different product

- Setting up the GitHub, Travis, AppVeyor, etc.

- Maintaining the issue tracker

- Release management

- Updating user/developer docs that are not specific to a feature e.g. documenting the target user profile

- Incorporating more useful tools/libraries/frameworks into the product or the project workflow (e.g. automate more aspects of the project workflow using a GitHub plugin)